About

This MCP server exposes AWS Cost Explorer data and Amazon Bedrock model‑invocation logs to Claude, allowing users to query and visualize AWS spend on demand with conversational prompts. It supports cross‑account access through assumed IAM roles.

Capabilities

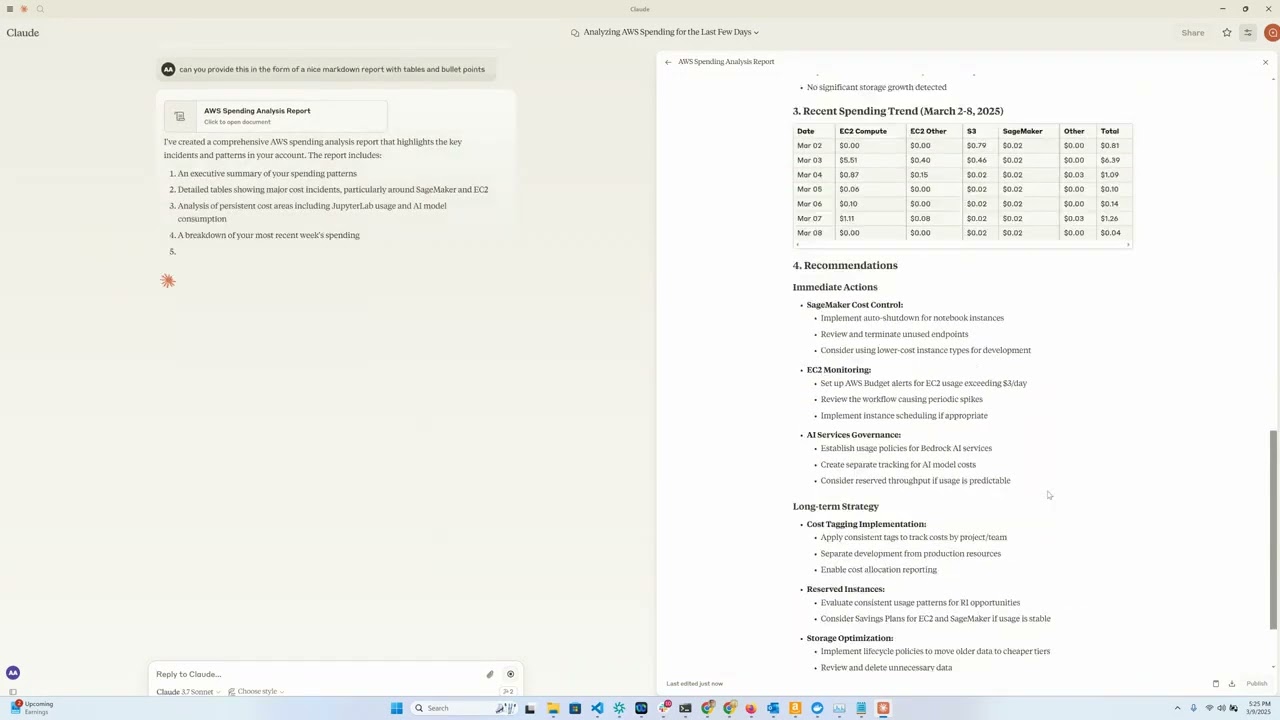

The AWS Cost Explorer MCP Server bridges the gap between raw cloud‑billing data and conversational AI by exposing AWS Cost Explorer and Amazon Bedrock usage logs through Anthropic’s Model Context Protocol (MCP). Developers can ask Claude natural‑language questions about spending, usage trends, and cost drivers without writing queries or building dashboards. Claude maps each question to one of the server’s tools, and the server turns that tool call into a Cost Explorer or CloudWatch Logs API request, returning structured results that the assistant can interpret and present in context.
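To make the translation step concrete, here is a minimal Python sketch of what a tool handler might do for a question such as "What was the total EC2 spend in the last week?": build the keyword arguments for boto3's Cost Explorer `get_cost_and_usage` call, then reduce the response to per-day totals. The function names `build_cost_request` and `daily_totals` are hypothetical, not this server's actual code. The request shape (`TimePeriod`, `Granularity`, `Metrics`, `Filter`) follows the Cost Explorer GetCostAndUsage API, where the `End` date is exclusive; the EC2 service label is an assumption you should check against your account's `SERVICE` dimension values.

```python
from datetime import date, timedelta
from typing import Any


def build_cost_request(service: str, end: date, days: int = 7) -> dict[str, Any]:
    """Hypothetical helper: kwargs for boto3 ce.get_cost_and_usage.

    Cost Explorer treats End as exclusive, so passing today's date
    covers the previous `days` full days.
    """
    start = end - timedelta(days=days)
    return {
        "TimePeriod": {"Start": start.isoformat(), "End": end.isoformat()},
        "Granularity": "DAILY",
        "Metrics": ["UnblendedCost"],
        # Restrict results to a single AWS service (SERVICE dimension).
        "Filter": {"Dimensions": {"Key": "SERVICE", "Values": [service]}},
    }


def daily_totals(response: dict[str, Any]) -> dict[str, float]:
    """Reduce a GetCostAndUsage response (no GroupBy) to {start_date: amount}."""
    totals: dict[str, float] = {}
    for period in response["ResultsByTime"]:
        amount = period["Total"]["UnblendedCost"]["Amount"]  # returned as a string
        totals[period["TimePeriod"]["Start"]] = float(amount)
    return totals


if __name__ == "__main__":
    # Assumed SERVICE value for EC2 compute; verify in your own account.
    req = build_cost_request("Amazon Elastic Compute Cloud - Compute", date(2024, 6, 8))
    print(req["TimePeriod"])  # {'Start': '2024-06-01', 'End': '2024-06-08'}
    # With AWS credentials configured, the live call would be:
    #   import boto3
    #   resp = boto3.client("ce").get_cost_and_usage(**req)
    #   print(sum(daily_totals(resp).values()))
```

Keeping request construction separate from the network call makes the tool's behavior easy to test without AWS access.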

At its core, the server provides a unified interface for two critical data sources: AWS Cost Explorer, which offers granular cost and usage metrics across all services, and Amazon Bedrock model‑invocation logs stored in CloudWatch Logs. By pulling data from both sources, the MCP server enables cross‑analysis, such as correlating Bedrock model usage with associated compute costs, to help teams understand the financial impact of AI workloads. This capability is especially valuable for organizations that run multiple Bedrock models or rely on AI services that generate significant cloud spend.
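A cross-analysis of this kind can be sketched as a join: sum tokens per model from the invocation-log records, then divide a per-model cost figure by those totals. This is a minimal illustration under stated assumptions. The record fields (`modelId`, `input.inputTokenCount`, `output.outputTokenCount`) follow the Bedrock invocation-log schema as we understand it, so verify them against your own log group. The `cost_by_model` mapping is hypothetical: attributing Cost Explorer line items to individual models is a separate step not shown here.

```python
from collections import defaultdict
from typing import Any, Iterable


def tokens_by_model(records: Iterable[dict[str, Any]]) -> dict[str, int]:
    """Sum input + output tokens per modelId across parsed invocation-log records."""
    totals: dict[str, int] = defaultdict(int)
    for rec in records:
        # Assumed field names; missing counts are treated as zero.
        tokens = rec.get("input", {}).get("inputTokenCount", 0) + rec.get("output", {}).get("outputTokenCount", 0)
        totals[rec["modelId"]] += tokens
    return dict(totals)


def cost_per_1k_tokens(tokens: dict[str, int], cost_by_model: dict[str, float]) -> dict[str, float]:
    """Join token totals with a hypothetical per-model cost map.

    Models missing from either side, or with zero tokens, are skipped.
    """
    result: dict[str, float] = {}
    for model_id, count in tokens.items():
        if model_id in cost_by_model and count > 0:
            result[model_id] = round(cost_by_model[model_id] / count * 1000, 4)
    return result


if __name__ == "__main__":
    # Placeholder model IDs and invented sample values, for illustration only.
    records = [
        {"modelId": "model-a", "input": {"inputTokenCount": 1000}, "output": {"outputTokenCount": 500}},
        {"modelId": "model-b", "input": {"inputTokenCount": 4000}, "output": {"outputTokenCount": 1000}},
    ]
    print(cost_per_1k_tokens(tokens_by_model(records), {"model-a": 3.0, "model-b": 10.0}))
```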

Key capabilities include:

- Time‑range queries: Retrieve daily, monthly, or custom‑period cost breakdowns.

- Service and region filtering: Drill down into specific AWS services or geographic regions to spot anomalies.

- Bedrock usage insights: Aggregate model calls by user, region, or model type and map them to cost metrics.

- Role‑assumption support: The server can assume IAM roles in other AWS accounts, allowing a single instance to aggregate spend across an enterprise.

- Secure HTTPS deployment: When hosted on EC2 or another cloud host, the MCP server can be exposed over TLS so that traffic between the client and the server is encrypted.
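Role-assumption support can be sketched in three pieces: build the role ARN for each member account, turn an STS `AssumeRole` response into credentials for a boto3 client, and aggregate one total per account. The helper names and the role name `CostExplorerReadOnly` are hypothetical. The `Credentials` fields (`AccessKeyId`, `SecretAccessKey`, `SessionToken`) and the boto3 `assume_role(RoleArn=..., RoleSessionName=...)` call are from the STS API. The live wiring is left in comments so the sketch runs without AWS access.

```python
from typing import Any, Callable


def role_arn(account_id: str, role_name: str) -> str:
    """Build the IAM role ARN the server would assume in a member account."""
    return f"arn:aws:iam::{account_id}:role/{role_name}"


def client_kwargs(assume_role_response: dict[str, Any]) -> dict[str, str]:
    """Map an STS AssumeRole response to boto3.client credential keyword arguments."""
    creds = assume_role_response["Credentials"]
    return {
        "aws_access_key_id": creds["AccessKeyId"],
        "aws_secret_access_key": creds["SecretAccessKey"],
        "aws_session_token": creds["SessionToken"],
    }


def aggregate_spend(account_ids: list[str], fetch_total: Callable[[str], float]) -> dict[str, float]:
    """Collect one total per account, plus the overall sum under the key 'ALL'."""
    per_account = {acct: fetch_total(acct) for acct in account_ids}
    per_account["ALL"] = sum(per_account.values())
    return per_account


# Hypothetical live wiring (requires AWS credentials and a matching role in each account):
#   import boto3
#   sts = boto3.client("sts")
#   def fetch_total(acct: str) -> float:
#       resp = sts.assume_role(RoleArn=role_arn(acct, "CostExplorerReadOnly"),
#                              RoleSessionName="cost-mcp")
#       ce = boto3.client("ce", **client_kwargs(resp))
#       ...  # call ce.get_cost_and_usage and sum the amounts
```

Passing `fetch_total` in as a function keeps the aggregation logic independent of how each account's figure is obtained.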

In real‑world scenarios, a finance team might ask, “What was the total EC2 spend in the last week for our staging environment?” and receive an instant, formatted answer. A data science lead could ask, “Which Bedrock model consumed the most resources in the last month?” and see a cost‑by‑model chart. When integrated into a LangGraph agent, the MCP server can feed live cost data into workflow logic, for example pausing experiments that exceed a budget threshold or alerting on unexpected spikes.
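The budget and spike logic described above could look like the following minimal sketch. The spike rule (latest day above `factor` times the mean of the previous `window` days) is one simple heuristic chosen for illustration, and the action names are hypothetical. A function like `next_action` could serve as the routing function for a conditional edge in a LangGraph graph, with the daily costs supplied by the MCP server's tools.

```python
from statistics import mean


def over_budget(spend_to_date: float, budget: float) -> bool:
    """True when cumulative spend has passed the budget threshold."""
    return spend_to_date > budget


def is_spike(daily_costs: list[float], factor: float = 2.0, window: int = 7) -> bool:
    """Flag the latest day if it exceeds `factor` times the mean of up to `window` prior days."""
    if len(daily_costs) < 2:
        return False  # not enough history to form a baseline
    history = daily_costs[-window - 1:-1]
    baseline = mean(history)
    return baseline > 0 and daily_costs[-1] > factor * baseline


def next_action(spend_to_date: float, budget: float, daily_costs: list[float]) -> str:
    """Pick a hypothetical workflow step; the budget check takes priority over spike alerts."""
    if over_budget(spend_to_date, budget):
        return "pause_experiments"
    if is_spike(daily_costs):
        return "send_alert"
    return "continue"


if __name__ == "__main__":
    print(next_action(50.0, 100.0, [10.0, 10.0, 10.0, 35.0]))  # send_alert
```

A fixed multiple of a trailing mean is easy to explain but ignores weekly seasonality; a production setup might prefer AWS Cost Anomaly Detection or a longer baseline.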

The server’s design aligns with MCP’s goal of decoupling AI assistants from direct API integrations. Developers can run the server locally for quick iteration or deploy it remotely on EC2, using IAM roles to access multiple accounts. Because Claude Desktop ships with a built‑in MCP client and LangGraph agents can load MCP tools through LangChain’s MCP adapters, adding cost‑analysis capabilities takes little effort: register the server’s launch command (for a local instance) or its endpoint (for a remote one) with the client. Teams can then build cost awareness into AI workflows, encouraging responsible cloud usage and tighter budget control.
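For the local case, Claude Desktop reads server definitions from the `mcpServers` key of its `claude_desktop_config.json`, where each entry gives a `command`, its `args`, and optional `env` variables. The sketch below generates such an entry. The server name, the `server.py` entry point, and the assumption that the server picks up standard AWS SDK environment variables (`AWS_PROFILE`, `AWS_DEFAULT_REGION`) are all hypothetical; check the server's own setup instructions for the real launch command.

```python
import json


def claude_desktop_entry(name: str, command: str, args: list[str], profile: str,
                         region: str = "us-east-1") -> dict:
    """Return a claude_desktop_config.json fragment registering a local stdio MCP server."""
    return {
        "mcpServers": {
            name: {
                "command": command,
                "args": args,
                # Assumed: the server uses the AWS SDK's standard credential/region variables.
                "env": {"AWS_PROFILE": profile, "AWS_DEFAULT_REGION": region},
            }
        }
    }


if __name__ == "__main__":
    # Hypothetical name and entry point; merge the output into your existing config file.
    entry = claude_desktop_entry("aws-cost-explorer", "python", ["server.py"], "default")
    print(json.dumps(entry, indent=2))
```

The region defaults to `us-east-1` because the Cost Explorer API endpoint is served from that region.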

Related Servers

MarkItDown MCP Server

Convert documents to Markdown for LLMs quickly and accurately

Context7 MCP

Real‑time, version‑specific code docs for LLMs

Playwright MCP

Browser automation via structured accessibility trees

BlenderMCP

Claude AI meets Blender for instant 3D creation

Pydantic AI

Build GenAI agents with Pydantic validation and observability

Chrome DevTools MCP

AI-powered Chrome automation and debugging


Explore More Servers

Mkslides MCP Server

Generate HTML slides from Markdown via Model Context Protocol

iRacing MCP Server

Connect iRacing data to Model Context Protocol

Buildkite MCP Server

Expose Buildkite pipelines to AI tools and editors

Figma to Vue MCP Server

Generate Vue 3 components from Figma designs instantly

Cornell Resume MCP Server

Auto-generate Cornell-style notes and questions, sync to Notion

GitMCP

Turn any GitHub repo into a live AI documentation hub