About

The Tencent Cloud COS MCP Server provides an easy-to-use Model Context Protocol (MCP) interface that lets large language models upload, download, and manage files in COS, and access a suite of image, video, and document AI capabilities, without writing code.

Capabilities

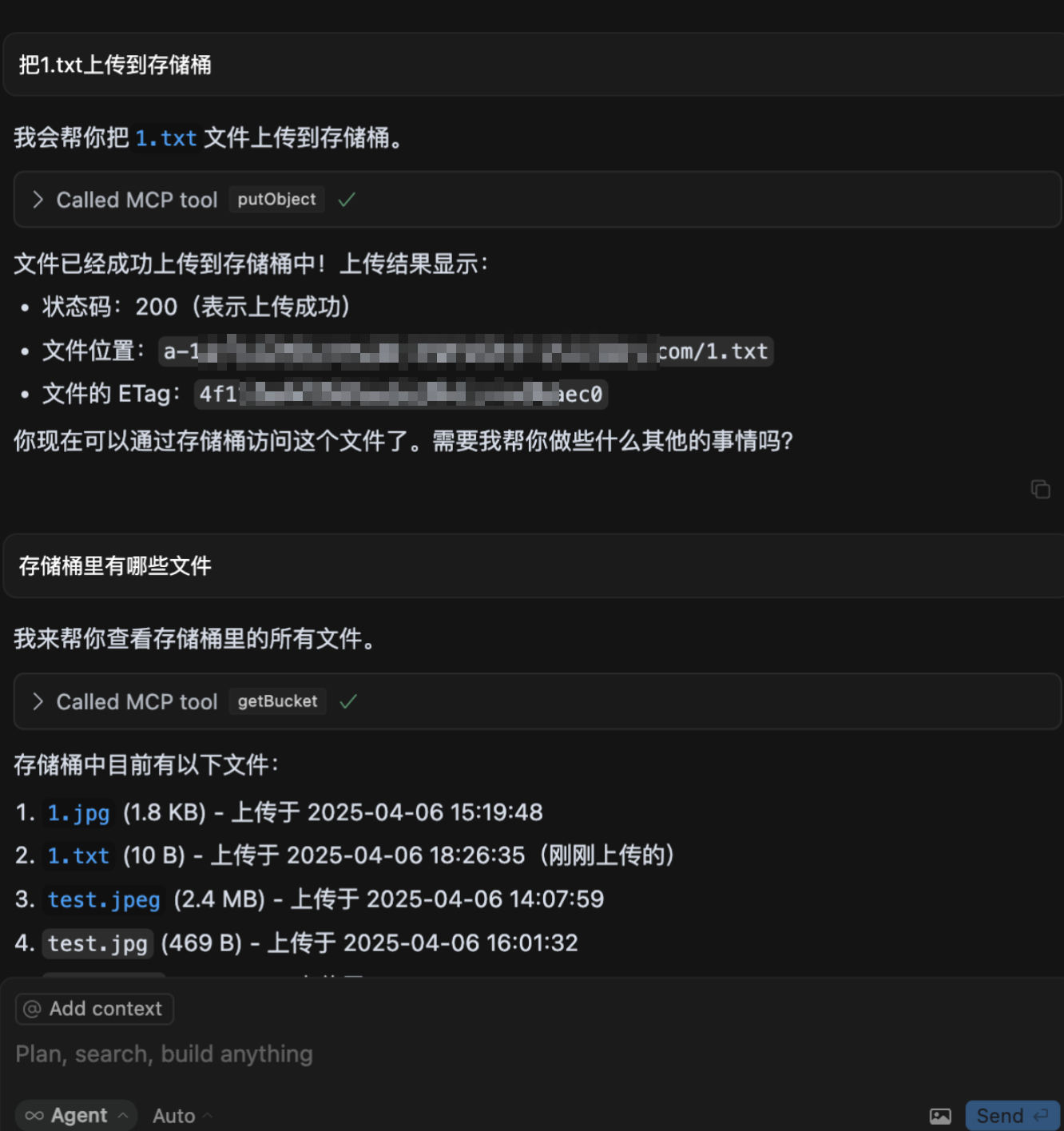

The Tencent Cloud COS MCP Server is a ready-to-use bridge that lets large language models work with Tencent Cloud Object Storage (COS) and the Cloud Infinite (CI) data processing service without custom code. By exposing a standard MCP interface, it removes the authentication, SDK integration, and API orchestration work that developers normally face when connecting a model to cloud storage. The server handles credential validation, bucket selection, and region routing based on its configuration, so the assistant can upload, download, or list files with a single tool call.
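
To make "bucket selection and region routing" concrete, here is a minimal sketch of how a bucket and region determine the request address. It relies on COS's documented virtual-hosted domain form `<BucketName-APPID>.cos.<Region>.myqcloud.com`; the `CosConfig` class, its field names, and the validation rule are hypothetical illustrations, not the server's actual configuration keys or code. Request signing, which a real client must also perform, is omitted.

```python
from dataclasses import dataclass
from urllib.parse import quote


@dataclass(frozen=True)
class CosConfig:
    # Hypothetical config shape; field names are illustrative only.
    secret_id: str
    secret_key: str
    region: str  # e.g. "ap-guangzhou"
    bucket: str  # bucket name including the APPID suffix, e.g. "examplebucket-1250000000"

    def validate(self) -> None:
        # Fail early if any setting is empty, naming every missing field.
        missing = [name for name, value in vars(self).items() if not value]
        if missing:
            raise ValueError(f"missing COS settings: {', '.join(missing)}")


def object_url(cfg: CosConfig, key: str) -> str:
    """Return the virtual-hosted-style COS URL for an object key."""
    cfg.validate()
    # Percent-encode the key (spaces, non-ASCII) but keep '/' as a path separator.
    encoded_key = quote(key.lstrip("/"), safe="/")
    return f"https://{cfg.bucket}.cos.{cfg.region}.myqcloud.com/{encoded_key}"


cfg = CosConfig("my-id", "my-key", "ap-guangzhou", "examplebucket-1250000000")
print(object_url(cfg, "photos/cat 1.jpg"))
# https://examplebucket-1250000000.cos.ap-guangzhou.myqcloud.com/photos/cat%201.jpg
```

Validating the configuration before building any URL means a missing credential surfaces as one clear error instead of a failed request later.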

Core capabilities rest on two pillars: cloud storage and cloud-based media processing. For storage, the server supports uploading files, downloading them back to local storage, and listing bucket contents. These are the building blocks of data ingestion pipelines, backup workflows, and serving assets to downstream services. The processing layer exposes a suite of AI-powered image and video utilities: metadata extraction, quality assessment, super-resolution, cropping, QR code recognition, watermarking, and natural-language image search via MetaInsight. Video functions include thumbnail extraction and frame capture. All of these operations are offloaded to Tencent's CI APIs, so the heavy media processing runs in the cloud rather than on the client, while the model still receives rich content analysis.
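
CI processing is commonly requested by appending processing parameters to an object's COS URL. The sketch below shows that style for two of the capabilities listed above: metadata extraction via `?imageInfo` and video frame capture via `ci-process=snapshot`. Whether the MCP server calls CI in exactly this way is an assumption, the helper names are hypothetical, and the parameter names should be checked against the Cloud Infinite documentation. Private buckets would also require a request signature, which is omitted here.

```python
from urllib.parse import urlencode


def image_info_url(object_url: str) -> str:
    """URL asking CI for basic image information such as format and dimensions."""
    return f"{object_url}?imageInfo"


def video_frame_url(object_url: str, time_s: float, fmt: str = "jpg") -> str:
    """URL asking CI to capture one video frame at `time_s` seconds."""
    if time_s < 0:
        raise ValueError("time_s must be non-negative")
    # urlencode leaves '-' unescaped, so the key stays "ci-process".
    query = urlencode({"ci-process": "snapshot", "time": time_s, "format": fmt})
    return f"{object_url}?{query}"


base = "https://examplebucket-1250000000.cos.ap-guangzhou.myqcloud.com"
print(image_info_url(f"{base}/photos/cat.jpg"))
print(video_frame_url(f"{base}/videos/demo.mp4", 1.5))
```

Because the processing is expressed as a URL on an existing object, no image or video bytes pass through the client; CI reads the object directly from the bucket.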

Typical use cases include automated media ingestion: a model scrapes a web page, pulls images or videos, processes them (for example, by upscaling or tagging them), and pushes the results to COS for long-term storage. Developers can also use it for data backup, uploading quick snapshots of local datasets so they are stored durably in the cloud and remain accessible over the network. The natural-language search feature is valuable for building knowledge bases or asset libraries where users query images by description rather than by file name.

Integration into AI workflows is straightforward. The server exposes its operations as MCP tools, which the client invokes with JSON-RPC requests that name a tool and supply its arguments. The server returns structured results, so the assistant can chain operations (upload a file, request a thumbnail, then store its metadata) in response to a single prompt. Because the server can run over either the stdio or the SSE transport, it fits both local setups, where the client launches it as a subprocess, and networked deployments, and it works with MCP clients such as Claude Desktop or Cursor.
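
The request shape is defined by MCP itself rather than by this server: a JSON-RPC 2.0 message with method `tools/call` and `params` holding the tool `name` and its `arguments`. Over the stdio transport, each message is a single line of JSON. The sketch below builds such messages; the tool names `upload_file` and `get_image_info` and their arguments are hypothetical placeholders, not the server's actual tool list, and a real session must first complete MCP's `initialize` handshake, which is omitted here.

```python
import itertools
import json
from typing import Any

_request_ids = itertools.count(1)  # JSON-RPC ids must be unique per session


def tool_call(name: str, arguments: dict[str, Any]) -> dict[str, Any]:
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def to_stdio_frame(message: dict[str, Any]) -> str:
    # stdio transport: one JSON message per line with no embedded newlines.
    # json.dumps escapes newlines inside strings, so the only '\n' is the delimiter.
    return json.dumps(message, separators=(",", ":")) + "\n"


# Hypothetical tool names and arguments, for illustration only.
chain = [
    tool_call("upload_file", {"localPath": "./cat.jpg", "key": "photos/cat.jpg"}),
    tool_call("get_image_info", {"key": "photos/cat.jpg"}),
]
frames = [to_stdio_frame(message) for message in chain]
for frame in frames:
    print(frame, end="")
```

In an actual chain, the client sends the second request only after reading the first result, so the model can feed values from one tool's output into the next call's arguments.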

What sets the COS MCP Server apart is its zero-code, minimal-configuration approach for developers familiar with MCP: once credentials, region, and bucket are set in the server's configuration, the model needs no further setup. By bundling COS and CI functionality behind a unified protocol, it removes the need to manage separate SDKs and keeps API keys out of model-facing code. The result is a lightweight tool that speeds up the creation of AI-driven data pipelines on Tencent Cloud.

Related Servers

MindsDB MCP Server

Unified AI-driven data query across all sources

Homebrew Legacy Server

Legacy Homebrew repository split into core formulae and package manager

Daytona

Secure, elastic sandbox infrastructure for AI code execution

SafeLine WAF Server

Secure your web apps with a self‑hosted reverse‑proxy firewall

mediar-ai/screenpipe

MCP Server: mediar-ai/screenpipe

Skyvern

MCP Server: Skyvern

