About

n8n is a no-code/low-code workflow automation engine that lets technical teams build, run, and extend automations using JavaScript/Python, a rich library of integrations, and native AI capabilities—all while keeping full control over data and deployments.

Capabilities

Overview of the n8n MCP Server

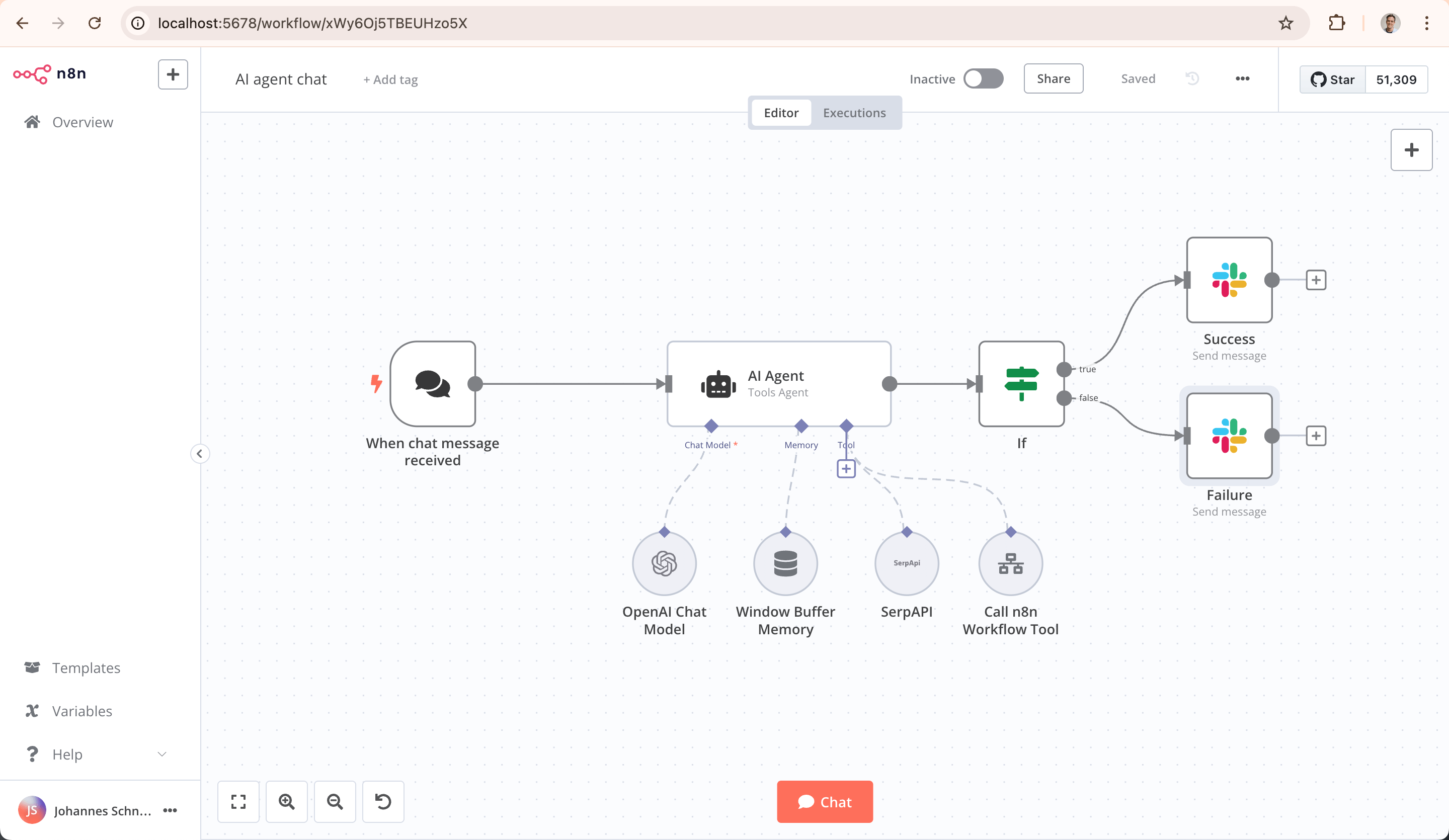

The n8n MCP server bridges AI assistants with a powerful, self‑hostable workflow automation platform. It addresses a common pain point: integrating disparate services and custom code into AI‑driven processes without giving up data ownership or control. By exposing n8n’s 400+ connectors, visual editor, and AI‑native capabilities through the Model Context Protocol, developers can let Claude or other assistants trigger complex orchestrations, fetch data from cloud services, and even run custom JavaScript or Python snippets, all while keeping the entire workflow inside a secure, self‑hosted environment.

At its core, n8n provides a flexible execution engine that blends low‑code visual design with the freedom to inject bespoke logic. The MCP server offers three kinds of building blocks: resources that represent workflow definitions, tools that expose individual nodes (e.g., HTTP requests, database queries, or custom scripts), and prompt templates that guide the AI on how to compose calls. Together, these allow developers to build reusable, parameterized workflows that the AI can invoke with minimal context. For example, an assistant could ask to “retrieve the latest sales report,” and the MCP server would translate that into a pre‑defined n8n workflow that queries a database, formats the data, and returns it to the user.
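The request-to-workflow mapping described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not n8n's or the MCP server's actual API: the `Tool` class, the `REGISTRY` dictionary, the `call_tool` function, and the hard-coded sales rows are all hypothetical names and data invented for the example.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class Tool:
    """Hypothetical tool entry: a name, a description for the assistant, and a handler."""
    name: str
    description: str
    handler: Callable[[Dict[str, Any]], Any]


def latest_sales_report(params: Dict[str, Any]) -> Dict[str, Any]:
    """Stand-in for a pre-defined workflow: query, format, return."""
    # Hard-coded rows stand in for the database query step (illustrative data only).
    rows = [
        {"region": "EMEA", "total": 1200},
        {"region": "APAC", "total": 800},
    ]
    # Optional parameter: how many rows to include in the report.
    limit = params.get("limit", len(rows))
    selected = rows[:limit]
    # Formatting step: return the rows together with a computed grand total.
    return {"rows": selected, "grand_total": sum(r["total"] for r in selected)}


# Hypothetical registry mapping tool names to parameterized workflows.
REGISTRY: Dict[str, Tool] = {
    "latest_sales_report": Tool(
        name="latest_sales_report",
        description="Retrieve the latest sales report",
        handler=latest_sales_report,
    )
}


def call_tool(name: str, params: Dict[str, Any]) -> Any:
    """Dispatch a tool call by name, rejecting names that are not registered."""
    tool = REGISTRY.get(name)
    if tool is None:
        raise KeyError(f"unknown tool: {name}")
    return tool.handler(params)
```

In this sketch, the assistant only needs the tool name and a small parameter dictionary, for example `call_tool("latest_sales_report", {"limit": 1})`; the query and formatting logic stay inside the workflow.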

Key capabilities include AI‑native node support through LangChain integration, enabling assistants to chain multiple AI calls with domain data and external APIs. The server’s fair‑code license means the source is always available, and developers can self‑host or use a managed cloud offering. Enterprise features such as fine‑grained permissions, SSO, and air‑gapped deployments make it suitable for regulated industries. The extensive community ecosystem—over 900 ready‑to‑use templates and a vibrant forum—ensures that new use cases can be prototyped quickly.

Typical real‑world scenarios involve automating customer support workflows, generating dynamic reports, or orchestrating CI/CD pipelines. An AI assistant can trigger a “deploy to staging” workflow that checks code quality, runs tests, and updates deployment environments—all while the MCP server handles authentication, logging, and error handling. Because n8n can run arbitrary JavaScript or Python, developers can embed custom logic directly within the workflow, giving AI assistants the ability to perform computations or data transformations that would otherwise require additional services.
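As a rough sketch of the “deploy to staging” scenario, the following Python runs a list of steps in order, logs each one, and stops at the first failure. It does not use n8n itself; `run_workflow` and the three step functions are hypothetical stand-ins, and the simulated test failure exists only to show the error-handling path.

```python
import logging
from typing import Callable, List, Tuple

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("workflow")

# A step is a (name, action) pair; the action raises an exception on failure.
Step = Tuple[str, Callable[[], None]]


def run_workflow(steps: List[Step]) -> List[str]:
    """Run steps in order, stop at the first failure, return names of completed steps."""
    completed: List[str] = []
    for name, action in steps:
        log.info("starting step %s", name)
        try:
            action()
        except Exception as exc:
            # Log the error and halt so later steps (e.g. deployment) never run.
            log.error("step %s failed: %s", name, exc)
            break
        completed.append(name)
    return completed


# Hypothetical step implementations for illustration only.
def check_code_quality() -> None:
    pass


def run_tests() -> None:
    raise RuntimeError("2 tests failed")  # simulated failure


def update_staging() -> None:
    pass


DEPLOY_TO_STAGING: List[Step] = [
    ("check_code_quality", check_code_quality),
    ("run_tests", run_tests),
    ("update_staging", update_staging),
]
```

Calling `run_workflow(DEPLOY_TO_STAGING)` here completes only the quality check, because the simulated test failure halts the run before the staging update.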

In summary, the n8n MCP server empowers developers to fuse AI assistants with a robust, self‑hostable automation platform. It delivers seamless integration of external APIs, custom code, and AI logic while preserving data sovereignty, offering a compelling solution for teams that need both speed and control in their AI‑augmented workflows.

Related Servers

FastMCP

TypeScript framework for rapid MCP server development

Activepieces

Open-source AI automation platform for building and deploying extensible workflows

MaxKB

Enterprise‑grade AI agent platform with RAG and workflow orchestration

Filestash

Web‑based file manager for any storage backend

MCP for Beginners

Learn Model Context Protocol with hands‑on examples

Inbox Zero

AI‑powered email assistant that organizes, drafts, and tracks replies

Explore More Servers

ChatGPT X Deepseek X Grok X Claude Linux App

Native desktop wrappers for leading AI chat platforms on Linux

Comment Stripper MCP

Strip comments from code across languages

Code Explorer MCP Server

A lightweight notes system for Model Context Protocol

Graph Memory RAG MCP Server

In-memory graph storage for AI context and relationships

MCP RDF Explorer

Conversational SPARQL for local and endpoint knowledge graphs

MCP Reasoner

Advanced reasoning for Claude with Beam Search and MCTS