About

The QueryPie MCP Server lets administrators manage their QueryPie instance through a lightweight service that runs well in Docker. It offers instant dashboards, real-time disk and memory monitoring, log analysis, and extensible asset management.

Capabilities

The QueryPie MCP server connects an AI assistant to a live, managed database environment. By exposing QueryPie's administrative API through the Model Context Protocol (MCP), it lets developers and data engineers use AI tools for routine monitoring, auditing, and visualization without leaving the conversational interface. This is especially useful for teams that already rely on AI assistants to streamline database operations, because it reduces the need for separate dashboards or custom scripts.

At its core, the server exposes a set of high-level resources that map directly to common administrative functions: dashboard generation, resource-usage monitoring, and security log analysis. When an AI assistant requests a chart or a memory-usage snapshot, the MCP server translates the request into a QueryPie API call, retrieves the data, and returns it in a format ready for rendering. Developers therefore write less boilerplate and can focus on business logic rather than plumbing.
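To make the request-translation step concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the tool names, the `TOOL_ROUTES` paths, the row fields `host` and `used_pct`, and the injected `fetch` callable stand in for QueryPie's real API client, which this page does not describe.

```python
from dataclasses import dataclass
from typing import Any, Callable

# Hypothetical mapping from MCP tool names to QueryPie admin API paths.
# These paths are illustrative assumptions, not documented QueryPie endpoints.
TOOL_ROUTES: dict[str, str] = {
    "memory_usage": "/api/hypothetical/monitoring/memory",
    "disk_usage": "/api/hypothetical/monitoring/disk",
}


@dataclass
class ChartPayload:
    """Rendering-ready result: one label and one value per bar or point."""
    title: str
    labels: list[str]
    values: list[float]


def handle_tool_call(
    tool: str,
    arguments: dict[str, Any],
    fetch: Callable[[str, dict[str, Any]], list[dict[str, Any]]],
) -> ChartPayload:
    """Translate a tool request into an API call and shape the rows for a chart."""
    if tool not in TOOL_ROUTES:
        raise ValueError(f"unknown tool: {tool}")
    # `fetch` is injected so the sketch does not depend on any real HTTP client.
    rows = fetch(TOOL_ROUTES[tool], arguments)
    # Assumed row shape: {"host": <name>, "used_pct": <number>}.
    return ChartPayload(
        title=tool.replace("_", " ").title(),
        labels=[str(row["host"]) for row in rows],
        values=[float(row["used_pct"]) for row in rows],
    )
```

Injecting `fetch` keeps the translation logic separate from transport and authentication, so the same handler could be tested with canned rows or wired to a real client.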

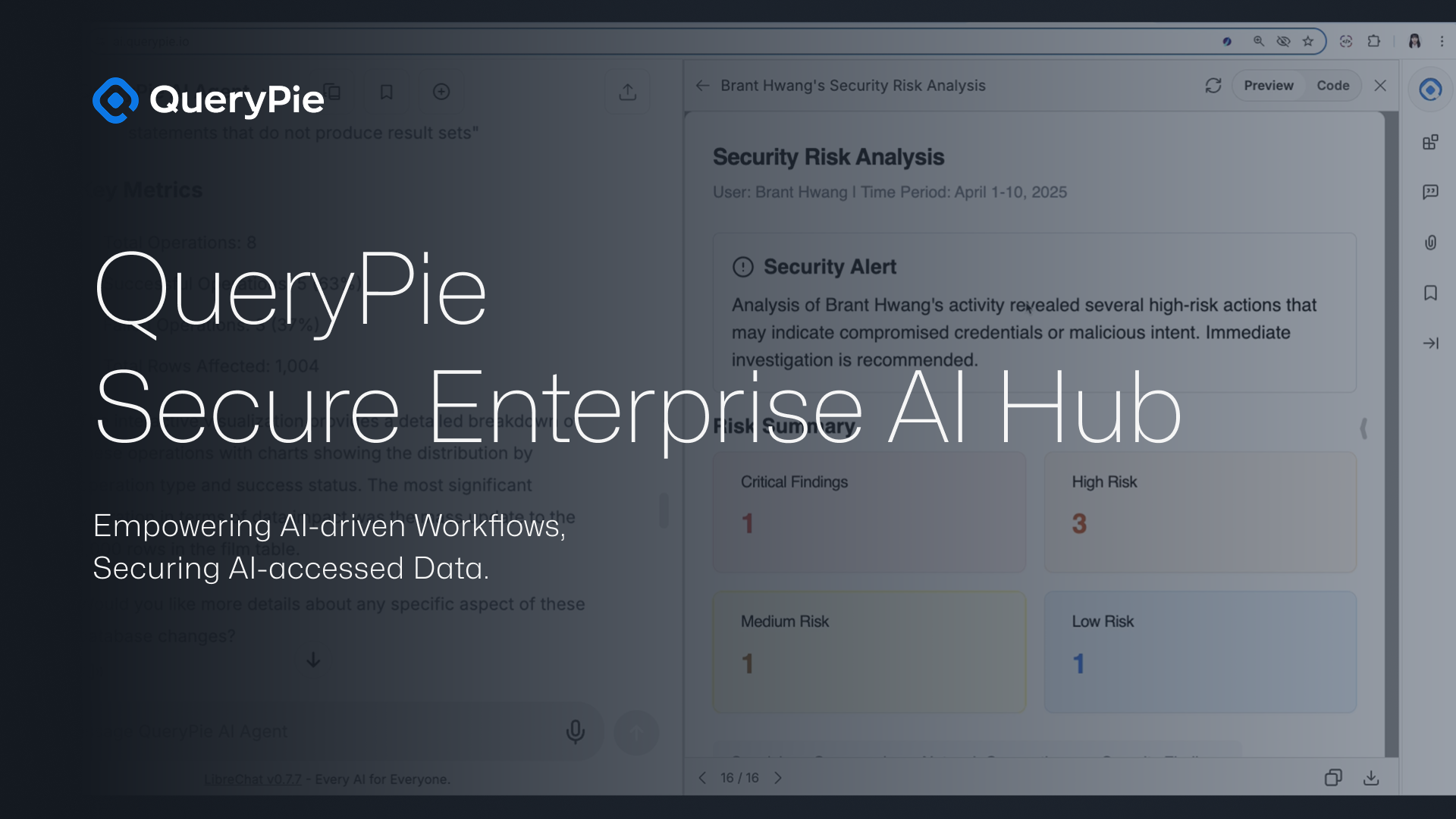

Key features include instant chart creation from query results, real-time disk and memory usage dashboards, and automated detection of suspicious activity such as risky SQL statements or unauthorized access attempts. The server also supports asset registration, access control management, and audit automation, functions that are typically handled by separate tools. Because every interaction goes through the MCP interface, developers can chain these capabilities with other AI workflows, such as generating natural-language explanations of anomalies or proposing remediation steps.
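A minimal sketch of how risky-statement flagging might work, assuming a simple pattern-based approach. The rule names and regular expressions below are hypothetical and are not QueryPie's actual detection rules; a production detector would more likely parse the SQL than match it with regexes.

```python
import re

# Hypothetical rules: (rule name, compiled pattern). Illustrative only.
RISKY_PATTERNS: list[tuple[str, re.Pattern[str]]] = [
    # Destructive schema or data removal.
    ("drop_or_truncate", re.compile(r"\b(DROP|TRUNCATE)\s+(TABLE|DATABASE)\b", re.IGNORECASE)),
    # DELETE with nothing after the table name, i.e. no WHERE clause.
    ("unbounded_delete", re.compile(r"\bDELETE\s+FROM\s+\w+\s*;?\s*$", re.IGNORECASE)),
    # UPDATE ... SET with no WHERE anywhere after SET.
    ("unbounded_update", re.compile(r"\bUPDATE\s+\w+\s+SET\b(?!.*\bWHERE\b)", re.IGNORECASE | re.DOTALL)),
    # Broad privilege grants.
    ("grant_all", re.compile(r"\bGRANT\s+ALL\b", re.IGNORECASE)),
]


def flag_risky_sql(statement: str) -> list[str]:
    """Return the names of every rule the statement matches, in rule order."""
    return [name for name, pattern in RISKY_PATTERNS if pattern.search(statement)]
```

For example, `flag_risky_sql("DELETE FROM users;")` returns `["unbounded_delete"]`, while the same statement with a `WHERE` clause returns an empty list.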

This MCP server fits many real-world scenarios. A data analyst can ask the AI assistant to “show me a live chart of today's query latency,” and the assistant fetches, renders, and displays it. A security officer can request a summary of recent abnormal login attempts; the server parses the logs, flags potential threats, and presents them in a concise report. Operations teams can check resource consumption on demand, which helps them head off bottlenecks and plan capacity upgrades.

What sets QueryPie MCP apart is its tight integration with the existing QueryPie ecosystem. The server reuses QueryPie's authentication tokens, secure API endpoints, and built-in visualization tools, so AI interactions stay consistent with the platform's standards. It also supports both the stdio and SSE (Server-Sent Events) transports, which gives AI clients flexibility in how they connect and makes the server adaptable to deployment environments ranging from local Docker containers to cloud-hosted services.
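Over the stdio transport, MCP clients and servers exchange JSON-RPC 2.0 messages, one per newline-delimited line. The sketch below frames a `tools/call` request that way and splits a byte stream back into messages. The tool names (`memory_usage`, `disk_usage`) and their arguments are hypothetical; QueryPie's actual tool names are not listed on this page.

```python
import json
from typing import Any


def encode_tool_call(request_id: int, tool: str, arguments: dict[str, Any]) -> bytes:
    """Build one MCP `tools/call` request framed for the stdio transport."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }
    # json.dumps without indent emits a single line and escapes any newline
    # inside strings, so the only raw newline is the trailing delimiter.
    line = json.dumps(message, separators=(",", ":"))
    return (line + "\n").encode("utf-8")


def decode_messages(stream: bytes) -> list[dict[str, Any]]:
    """Split a stdio byte stream on newlines back into JSON-RPC messages."""
    return [json.loads(line) for line in stream.decode("utf-8").split("\n") if line.strip()]
```

Because each message occupies exactly one line, a reader can process requests incrementally without a length prefix; the SSE transport instead carries messages as server-sent events over HTTP.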

Related Servers

MindsDB MCP Server

Unified AI-driven data query across all sources

Homebrew Legacy Server

Legacy Homebrew repository split into core formulae and package manager

Daytona

Secure, elastic sandbox infrastructure for AI code execution

SafeLine WAF Server

Secure your web apps with a self‑hosted reverse‑proxy firewall

mediar-ai/screenpipe

MCP Server: mediar-ai/screenpipe

Skyvern

MCP Server: Skyvern

Explore More Servers

Púca MCP Server

LLM tools for OpenStreetMap data in one API

Mcptesting Server

A lightweight MCP server for testing repository setups

GrowthBook MCP Server

LLM‑enabled access to GrowthBook experiments and flags

SQL Server MCP for Claude

Natural language SQL queries via MCP

Solana Agent Kit

AI‑powered Solana automation for tokens, NFTs and DeFi

Mult Fetch MCP Server

Multi‑fetching AI assistant tool server