About

The Trieve MCP Server provides a single API for semantic vector search, typo‑tolerant full‑text search, sub‑sentence highlighting, content recommendations, and RAG endpoints. It can be self‑hosted in a VPC or on Kubernetes, which keeps data and AI workflows private.

Capabilities

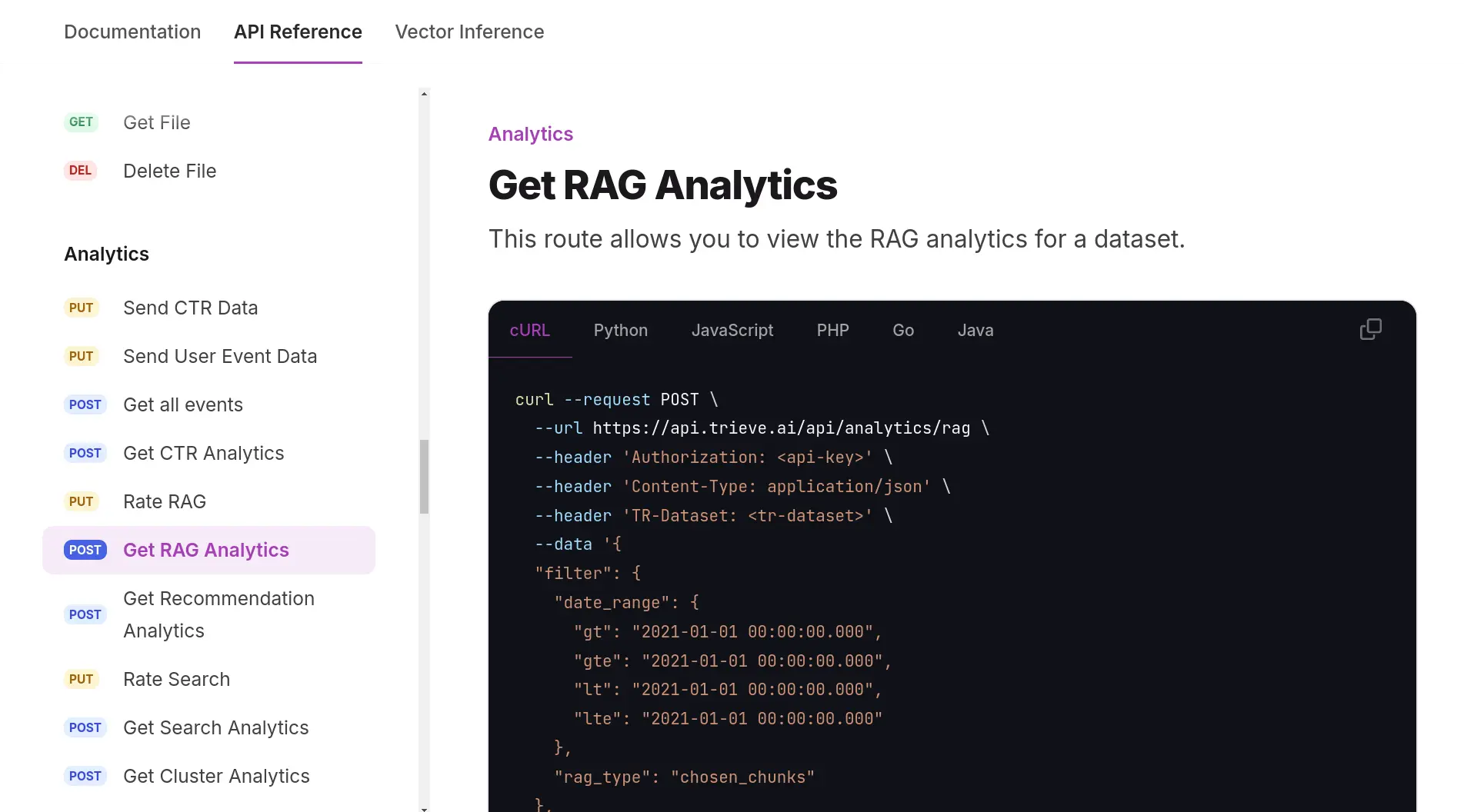

The Trieve MCP server turns a document collection into a semantic search and retrieval‑augmented generation (RAG) engine. It exposes vector search, typo‑tolerant text search, sub‑sentence highlighting, recommendations, and RAG through a single API, so developers do not have to stitch together separate search back‑ends and LLM integrations. Developers can ingest PDFs, web pages, or any other text, and Trieve represents each chunk twice: as a dense vector (using OpenAI or Jina embedding models) and as a sparse neural vector (using the SPLADE model naver/efficient-splade-VI-BT-large-query). Searching both representations lets a single query combine semantic matching with the typo tolerance of sparse search.
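
To illustrate why querying a dense and a sparse representation together helps, here is a toy Python sketch that scores chunks both ways and merges the two rankings. The vectors are hand-made stand-ins, not real OpenAI, Jina, or SPLADE outputs, and reciprocal rank fusion is used only as a common, simple merge strategy; it is an assumption, not a description of how Trieve actually combines scores.

```python
import math

# Toy stand-ins: real dense vectors come from an embedding model (e.g. OpenAI or Jina),
# real sparse vectors from a SPLADE model. These values are hand-made for illustration.
Dense = list[float]
Sparse = dict[str, float]  # term -> weight; absent terms have weight 0


def cosine(a: Dense, b: Dense) -> float:
    """Cosine similarity between two dense vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def sparse_dot(a: Sparse, b: Sparse) -> float:
    """Dot product over the terms the two sparse vectors share."""
    return sum(w * b[t] for t, w in a.items() if t in b)


def rank(scores: dict[str, float]) -> list[str]:
    """Chunk ids ordered from best to worst score."""
    return sorted(scores, key=scores.get, reverse=True)


def rrf(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Reciprocal rank fusion: each ranking adds 1 / (k + position) to a chunk's score."""
    fused: dict[str, float] = {}
    for ranking in rankings:
        for position, chunk_id in enumerate(ranking, start=1):
            fused[chunk_id] = fused.get(chunk_id, 0.0) + 1.0 / (k + position)
    return rank(fused)


def hybrid_search(q_dense: Dense, q_sparse: Sparse,
                  chunks: dict[str, tuple[Dense, Sparse]]) -> list[str]:
    """Rank chunks by dense and by sparse similarity, then fuse the two rankings."""
    dense_scores = {cid: cosine(q_dense, d) for cid, (d, _) in chunks.items()}
    sparse_scores = {cid: sparse_dot(q_sparse, s) for cid, (_, s) in chunks.items()}
    return rrf([rank(dense_scores), rank(sparse_scores)])


chunks = {
    "refund-policy": ([0.9, 0.1, 0.0], {"refund": 1.2, "policy": 0.8}),
    "shipping-times": ([0.2, 0.9, 0.1], {"shipping": 1.1, "delivery": 0.7}),
    "returns-faq": ([0.8, 0.3, 0.1], {"return": 1.0, "refund": 0.6}),
}
print(hybrid_search([1.0, 0.2, 0.0], {"refund": 1.0}, chunks))
# → ['refund-policy', 'returns-faq', 'shipping-times']
```

Rank fusion only needs each list's ordering, so it sidesteps the fact that cosine scores and sparse dot products live on different scales.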

Trieve also supports the full RAG workflow out of the box. Pre‑built routes connect any LLM, accessed through OpenRouter or self‑hosted, to the indexed content, so teams do not need to build a custom retrieval pipeline. The "generate_off_chunks" route lets developers supply their own context chunks, while "create_message_completion_handler" provides managed multi‑turn conversations: it keeps topic‑based memory of each conversation and selects relevant chunks automatically. Together these simplify adding chat, FAQ bots, or document‑aware assistants to existing applications.
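
A minimal sketch of how a client might call the two RAG routes over HTTP with Python's standard library. The base URL, the `/chunk/generate` and `/message` paths, the `TR-Dataset` header, and the body fields (`chunk_ids`, `prev_messages`, `topic_id`, `new_message_content`) are assumptions based on Trieve's public API reference and should be verified against the current docs; the sketch only builds the requests and does not send them.

```python
import json
import urllib.request

# Assumed values: verify against the Trieve API reference before use.
BASE_URL = "https://api.trieve.ai/api"


def _post(path: str, api_key: str, dataset_id: str, body: dict) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request with Trieve-style auth headers."""
    return urllib.request.Request(
        BASE_URL + path,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": api_key,
            "TR-Dataset": dataset_id,  # assumed header selecting the dataset
            "Content-Type": "application/json",
        },
        method="POST",
    )


def generate_off_chunks(api_key: str, dataset_id: str,
                        chunk_ids: list[str], question: str) -> urllib.request.Request:
    """Bring-your-own-context RAG: the caller picks which chunks the LLM sees."""
    body = {
        "chunk_ids": chunk_ids,
        "prev_messages": [{"role": "user", "content": question}],
    }
    return _post("/chunk/generate", api_key, dataset_id, body)


def create_message(api_key: str, dataset_id: str,
                   topic_id: str, text: str) -> urllib.request.Request:
    """Managed RAG: the server keeps per-topic history and retrieves chunks itself."""
    body = {"topic_id": topic_id, "new_message_content": text}
    return _post("/message", api_key, dataset_id, body)


req = generate_off_chunks("YOUR_API_KEY", "YOUR_DATASET_ID",
                          ["chunk-1", "chunk-2"], "What is the refund window?")
print(req.get_method(), req.full_url)
# To actually send it: urllib.request.urlopen(req).read()
```

The split mirrors the two routes described above: with `generate_off_chunks` the caller controls retrieval, while `create_message` delegates both retrieval and conversation memory to the server.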

Key capabilities include:

- Semantic dense vector search for precise intent matching.

- Sparse neural search that tolerates typos and spelling variations, improving user experience on noisy data.

- Sub‑sentence highlighting that finds the words or sentences in a chunk that match the query and bolds them in results, showing users at a glance why a result matched.

- Recommendation API that surfaces similar chunks (or similar files, when chunks are grouped), useful for content recommendation engines.

- Self‑hosting on AWS, GCP, Kubernetes, or Docker Compose, giving enterprises full control over data and compliance.

- Bring‑your‑own‑model support, allowing teams to plug in their own embedding models or LLMs.
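
The highlighting idea can be illustrated with a short, self-contained Python sketch: split a chunk into sentences, keep the sentence that shares the most words with the query, and wrap the matching words in `**bold**` markers. This naive word-overlap heuristic is an illustration only, not Trieve's highlighting algorithm.

```python
import re


def _words(text: str) -> set[str]:
    """Lower-cased word tokens."""
    return set(re.findall(r"\w+", text.lower()))


def highlight(chunk: str, query: str) -> str:
    """Return the best-matching sentence of `chunk` with query words bolded."""
    query_words = _words(query)
    # Naive sentence split: break after ., ! or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", chunk.strip())
    # Pick the sentence with the largest word overlap (first one wins ties).
    best = max(sentences, key=lambda s: len(_words(s) & query_words))
    # Bold every whole word in that sentence that also appears in the query.
    return re.sub(
        r"\w+",
        lambda m: f"**{m.group(0)}**" if m.group(0).lower() in query_words else m.group(0),
        best,
    )


chunk = ("Orders ship within two days. "
         "Refunds are issued within 30 days of purchase. "
         "Contact support for help.")
print(highlight(chunk, "refunds in 30 days"))
# → **Refunds** are issued within **30** **days** of purchase.
```

Exact word matching means "refund" would not match "Refunds"; a production highlighter would typically normalize or stem words first.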

Real‑world use cases include knowledge bases, internal documentation search, e‑commerce product recommendations, and AI‑powered customer support. A SaaS platform can ingest its user manuals and offer a search bar that returns highlighted snippets, while an enterprise chatbot can retrieve relevant policy documents on demand. Because the server implements the Model Context Protocol, any MCP‑compatible assistant, such as Claude or Gemini, can request search results or RAG context with a single tool call. Having search, recommendations, and RAG in one server also means fewer services for developers to deploy and maintain.
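
Under the Model Context Protocol, a tool call is a JSON-RPC 2.0 `tools/call` request that the client sends over the server's transport (for example stdio). The sketch below builds such messages in Python; the tool name `search` and its `query`/`search_type` arguments are hypothetical placeholders, since the Trieve MCP server's real tool names and input schemas are not given here and should be read from its `tools/list` response.

```python
import json
from itertools import count

# JSON-RPC request ids must not be reused by the sender within one MCP session.
_next_id = count(1)


def _request(method: str, params: dict) -> str:
    """Serialize a JSON-RPC 2.0 request; `params` is omitted when empty."""
    message = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params:
        message["params"] = params
    return json.dumps(message)


def tools_list() -> str:
    """Ask the server which tools it offers and what arguments each accepts."""
    return _request("tools/list", {})


def tools_call(name: str, arguments: dict) -> str:
    """Invoke one tool by name with JSON arguments."""
    return _request("tools/call", {"name": name, "arguments": arguments})


print(tools_list())
# Hypothetical tool name and arguments; use the names returned by tools/list.
print(tools_call("search", {"query": "refund policy", "search_type": "hybrid"}))
```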

Related Servers

Netdata

Real‑time infrastructure monitoring for every metric, every second.

Awesome MCP Servers

Curated list of production-ready Model Context Protocol servers

JumpServer

Browser‑based, open‑source privileged access management

OpenTofu

Infrastructure as Code for secure, efficient cloud management

FastAPI-MCP

Expose FastAPI endpoints as MCP tools with built‑in auth

Pipedream MCP Server

Event‑driven integration platform for developers

Explore More Servers

IACR Cryptology ePrint Archive MCP Server

Programmatic access to cryptographic research papers

MCP Web Browser Server

Headless web browsing with Playwright-powered API

NPM Documentation MCP Server

Fast, cached NPM package metadata and docs

Buienradar MCP Server

Fetch 2‑hour precipitation forecasts by location

ASR Graph of Thoughts (GoT) MCP Server

Graph‑based reasoning for AI models via Model Context Protocol

Mcp Server Again

Re-implementing MCP server functionality in Python