About

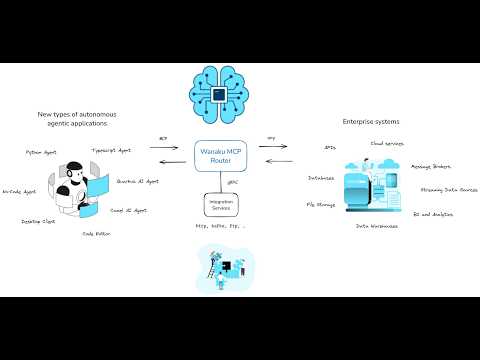

Wanaku is a Model Context Protocol (MCP) router that connects AI-enabled applications to their data sources and tools, giving them a standardized way to share context with LLMs. It serves as a central hub for routing contextual data across diverse AI services.

Capabilities

Overview

Wanaku is a Model Context Protocol (MCP) router designed to unify and streamline the way AI‑enabled applications expose context, tools, prompts, and sampling logic to large language models. By acting as a central hub, it solves the fragmented integration problem that developers face when connecting multiple data sources and toolchains to a single LLM. Instead of wiring each component directly into the model, Wanaku aggregates resources and presents them through a single MCP endpoint, simplifying deployment and maintenance.

At its core, the router accepts standard MCP requests (such as resource queries or tool invocations) and dispatches each one to the appropriate backend service. It then normalizes the backend's response into the MCP format before returning it to the client. This abstraction allows developers to swap underlying data stores or tooling without touching the LLM’s prompt logic, which supports a plug‑and‑play ecosystem. For teams building complex AI workflows, Wanaku removes much of the integration boilerplate and reduces coupling between components.
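The dispatch-and-normalize flow described above can be sketched as follows. This is a minimal illustration, not Wanaku's actual implementation: the `route` function, the two in-memory backends, and the `db://products/42` URI are hypothetical, and the error codes are illustrative. The only parts taken from MCP itself are the JSON-RPC 2.0 envelope, the `resources/read` and `tools/call` method names, and the general shape of their results.

```python
from typing import Any, Callable, Dict

# Hypothetical backends. A real router would call a database, an HTTP API,
# or another MCP server here.
def read_product_spec(uri: str) -> str:
    return f"spec for {uri}"

def add_numbers(arguments: Dict[str, Any]) -> str:
    return str(arguments["a"] + arguments["b"])

# Routing tables: resource URI -> reader, tool name -> callable.
RESOURCES: Dict[str, Callable[[str], str]] = {"db://products/42": read_product_spec}
TOOLS: Dict[str, Callable[[Dict[str, Any]], str]] = {"add": add_numbers}

def error(request_id: Any, code: int, message: str) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 error response (codes here are illustrative)."""
    return {"jsonrpc": "2.0", "id": request_id,
            "error": {"code": code, "message": message}}

def route(request: Dict[str, Any]) -> Dict[str, Any]:
    """Dispatch one MCP request to a backend and normalize the reply."""
    request_id = request.get("id")
    method = request.get("method")
    params = request.get("params", {})

    if method == "resources/read":
        uri = params.get("uri")
        reader = RESOURCES.get(uri)
        if reader is None:
            return error(request_id, -32602, f"unknown resource: {uri}")
        # Normalize the raw backend string into an MCP resource result.
        result = {"contents": [{"uri": uri, "mimeType": "text/plain",
                                "text": reader(uri)}]}
    elif method == "tools/call":
        name = params.get("name")
        tool = TOOLS.get(name)
        if tool is None:
            return error(request_id, -32602, f"unknown tool: {name}")
        # Normalize the raw backend string into an MCP tool result.
        text = tool(params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return error(request_id, -32601, f"method not found: {method}")

    return {"jsonrpc": "2.0", "id": request_id, "result": result}
```

Because clients only ever see the normalized result, a backend such as `read_product_spec` could be replaced with a different data store without any change on the client side.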

Key capabilities include:

- Resource routing: Exposes diverse data stores (databases, APIs, files) as MCP resources with uniform schemas.

- Tool orchestration: Enables LLMs to call external utilities (e.g., calculators, web scrapers) via a standardized tool interface.

- Prompt templating: Supports dynamic prompt generation and context injection, ensuring consistent formatting across applications.

- Sampling control: Provides fine‑grained sampling parameters (temperature, top‑k) that can be overridden per request.
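To make the first two capabilities more concrete, here is a rough sketch of a registry that describes resources and tools in a uniform way. The `Registry`, `ToolDescriptor`, and `ResourceDescriptor` classes are hypothetical names invented for this example and are not part of Wanaku. The field names (`name`, `description`, `inputSchema`, `uri`, `mimeType`) follow the descriptors that MCP uses when listing tools and resources. Prompt templating and sampling are left out to keep the sketch short.

```python
from dataclasses import asdict, dataclass, field
from typing import Any, Dict, List

@dataclass
class ToolDescriptor:
    # Field names are camelCase on purpose so that asdict() matches the
    # MCP wire format without a separate mapping step.
    name: str
    description: str
    inputSchema: Dict[str, Any]  # JSON Schema describing the tool arguments

@dataclass
class ResourceDescriptor:
    uri: str
    name: str
    mimeType: str = "text/plain"

@dataclass
class Registry:
    """Hypothetical in-memory catalog of everything the router exposes."""
    tools: Dict[str, ToolDescriptor] = field(default_factory=dict)
    resources: Dict[str, ResourceDescriptor] = field(default_factory=dict)

    def add_tool(self, tool: ToolDescriptor) -> None:
        if tool.name in self.tools:
            raise ValueError(f"tool already registered: {tool.name}")
        self.tools[tool.name] = tool

    def add_resource(self, resource: ResourceDescriptor) -> None:
        if resource.uri in self.resources:
            raise ValueError(f"resource already registered: {resource.uri}")
        self.resources[resource.uri] = resource

    def list_tools(self) -> Dict[str, List[Dict[str, Any]]]:
        # Shaped like the result of an MCP tools listing.
        return {"tools": [asdict(t) for t in self.tools.values()]}

    def list_resources(self) -> Dict[str, List[Dict[str, Any]]]:
        # Shaped like the result of an MCP resources listing.
        return {"resources": [asdict(r) for r in self.resources.values()]}
```

Whatever sits behind a descriptor (a database, an API, a file), the client sees the same small set of fields, which is what "uniform schemas" amounts to in practice.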

These features make Wanaku well suited to scenarios such as knowledge‑base querying, real‑time data analysis, and multi‑step decision pipelines. For example, a customer support chatbot can retrieve product specifications from a database, call an external sentiment analysis tool, and adjust generation parameters based on user intent, all through a single MCP endpoint.

Integration with AI workflows is straightforward: developers register their resources and tools with Wanaku, then configure the LLM client to point at the router’s MCP endpoint. The router handles authentication, request validation, and response formatting automatically. This glue layer lets developers focus on business logic rather than protocol plumbing.
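As an illustration of the request validation step mentioned above, the sketch below checks the JSON-RPC 2.0 envelope that every MCP request is wrapped in. The `validate_request` function is hypothetical and says nothing about how Wanaku itself validates requests. It assumes that `params`, when present, must be an object and that `id` is a string or a number.

```python
from typing import Any, List

def validate_request(message: Any) -> List[str]:
    """Return a list of problems with a JSON-RPC 2.0 request envelope.

    An empty list means the envelope looks well formed. This checks only
    the envelope, not whether the method or its parameters are supported.
    """
    if not isinstance(message, dict):
        return ["message must be a JSON object"]

    problems: List[str] = []

    if message.get("jsonrpc") != "2.0":
        problems.append('"jsonrpc" must be "2.0"')

    method = message.get("method")
    if not isinstance(method, str) or not method:
        problems.append('"method" must be a non-empty string')

    # bool is a subclass of int in Python, so exclude it explicitly.
    if "id" in message:
        request_id = message["id"]
        if isinstance(request_id, bool) or not isinstance(request_id, (str, int, float)):
            problems.append('"id" must be a string or a number')

    params = message.get("params")
    if params is not None and not isinstance(params, dict):
        problems.append('"params" must be an object when present')

    return problems
```

A router could run a check like this before dispatching and return a JSON-RPC error response whenever the list is not empty.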

Wanaku’s standout advantage lies in its open‑source, community‑driven design. By adhering to the MCP standard and providing a modular architecture, it encourages rapid iteration and sharing of best practices. Teams can contribute new resource adapters or tool plugins, enriching the ecosystem while keeping their own codebases lightweight. In short, Wanaku transforms a chaotic collection of AI components into a coherent, scalable service that accelerates product development and ensures consistent, high‑quality model interactions.

Related Servers

MarkItDown MCP Server

Convert documents to Markdown for LLMs quickly and accurately

Context7 MCP

Real‑time, version‑specific code docs for LLMs

Playwright MCP

Browser automation via structured accessibility trees

BlenderMCP

Claude AI meets Blender for instant 3D creation

Pydantic AI

Build GenAI agents with Pydantic validation and observability

Chrome DevTools MCP

AI-powered Chrome automation and debugging

Explore More Servers

Monzo MCP Server

Banking data access via Claude tools

Tempo MCP Server

Query Grafana Tempo traces via the Model Context Protocol

MCP Subfinder Server

JSON‑RPC wrapper for ProjectDiscovery subfinder

Linear Issues MCP Server

Read‑only access to Linear issues for LLMs

Mcp Rust CLI Server Template

Rust-based MCP server for seamless LLM integration

Langfuse MCP Server

Debug AI agents with Langfuse trace data via MCP