About

An MCP server that lets you upload video files or URLs, retrieve processing templates, create customizable tasks, and track progress through the ZapCap API.

Capabilities

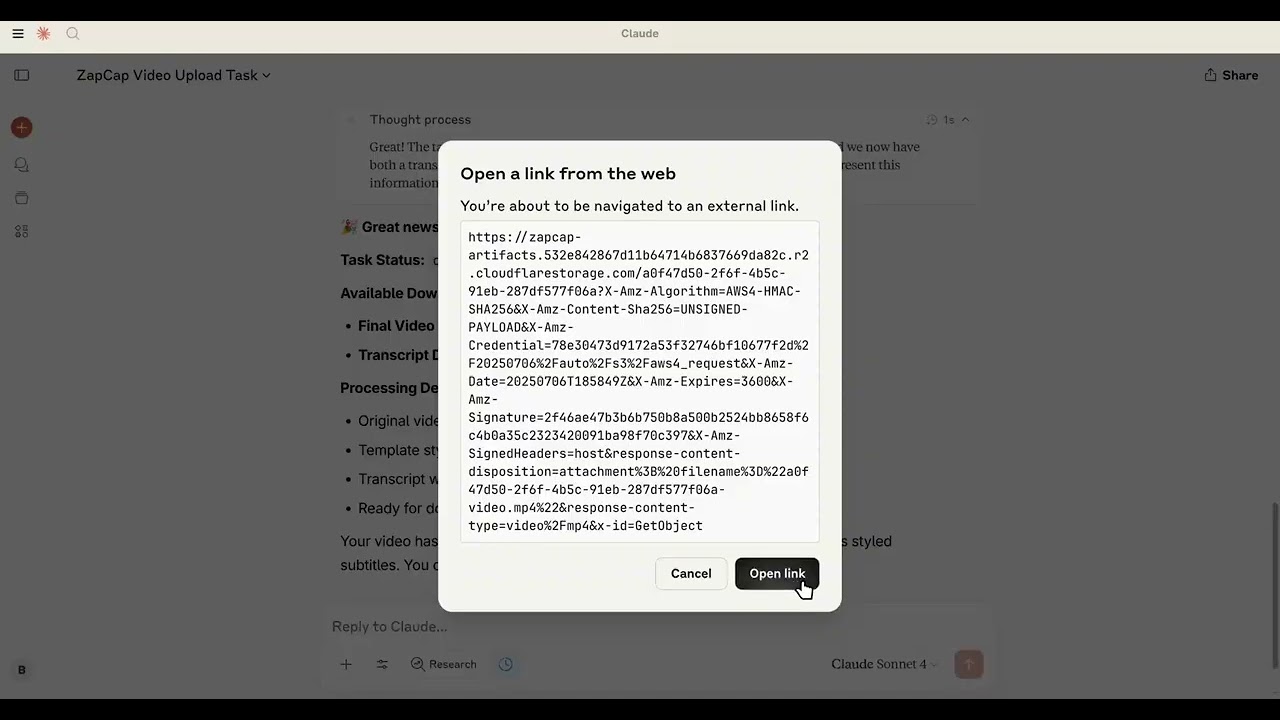

ZapCap MCP Server bridges the gap between AI assistants and video‑processing workflows by exposing the ZapCap API as a first‑class MCP service. Developers can upload raw footage, pull available rendering templates, and spawn fully custom processing jobs—all through a single, declarative interface that integrates seamlessly with any MCP‑compatible client such as Claude Desktop or Cursor. The server eliminates the need for manual REST calls, authentication handling, and file‑transfer logic, allowing AI agents to focus on higher‑level decision making while still controlling complex video pipelines.
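This page does not document the server's internals. The following is therefore only a sketch of the file-transfer logic described above, assuming the upload tool accepts either a local path or a public `http(s)` URL. It shows how such a tool might tell the two apart and stream a large local file in fixed-size pieces. `classify_upload`, `UploadSource`, `iter_chunks`, and the 8 MiB chunk size are hypothetical names and values. They are not part of ZapCap's API.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Iterator
from urllib.parse import urlparse


@dataclass(frozen=True)
class UploadSource:
    kind: str   # "url" for a public link, "file" for a local path (hypothetical labels)
    value: str  # the URL as given, or an absolute file path


def classify_upload(source: str) -> UploadSource:
    """Decide whether an upload argument is a public URL or a local file."""
    parsed = urlparse(source)
    if parsed.scheme in ("http", "https"):
        if not parsed.netloc:
            raise ValueError(f"URL has no host: {source!r}")
        return UploadSource("url", source)
    # Anything that is not an http(s) URL is treated as a path on disk.
    path = Path(source).expanduser()
    if not path.is_file():
        raise FileNotFoundError(f"no such file: {path}")
    return UploadSource("file", str(path.resolve()))


def iter_chunks(path: str, chunk_size: int = 8 * 1024 * 1024) -> Iterator[bytes]:
    """Yield a file in fixed-size pieces so a large video is never fully in memory."""
    with open(path, "rb") as fh:
        while chunk := fh.read(chunk_size):
            yield chunk
```

Keeping URL detection separate from chunked reading lets a URL be passed through to the remote service untouched. Only local files go through the streaming path.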

The core value of this MCP lies in its ability to turn a cloud‑based media platform into an AI‑driven toolset. With just three commands (upload, list templates, and create task), an assistant can schedule a video to be transcribed, dubbed, or enhanced with B‑roll and animated subtitles. The server reads authentication credentials from an environment variable, abstracts multipart uploads, and translates ZapCap's JSON responses into structured tool outputs. This makes it straightforward for developers to embed video manipulation in conversational agents, automated reporting bots, or content‑generation pipelines.
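The authentication and response-translation steps described above might look roughly like the minimal sketch below. The variable name `ZAPCAP_API_KEY`, the `ToolResult` shape, and the assumption that error bodies carry a `message` field are all illustrative. None of them is taken from the server's source.

```python
import json
import os
from dataclasses import dataclass
from typing import Any, Mapping

# Hypothetical variable name: use whatever the server's documentation specifies.
API_KEY_ENV = "ZAPCAP_API_KEY"


@dataclass
class ToolResult:
    """Structured output an MCP tool could hand back to the client."""
    ok: bool
    data: Any
    error: str = ""


def load_api_key(env: Mapping[str, str] = os.environ) -> str:
    """Read the API key from the environment, failing early if it is absent."""
    key = env.get(API_KEY_ENV, "").strip()
    if not key:
        raise RuntimeError(f"{API_KEY_ENV} is not set")
    return key


def to_tool_result(status: int, body: str) -> ToolResult:
    """Turn an HTTP status code and JSON body into a structured tool result."""
    try:
        payload = json.loads(body) if body else {}
    except json.JSONDecodeError:
        return ToolResult(False, None, f"HTTP {status}: response was not JSON")
    if 200 <= status < 300:
        return ToolResult(True, payload)
    # Hypothetical error shape: assumes failures carry a "message" field.
    message = payload.get("message", "unknown error") if isinstance(payload, dict) else "unknown error"
    return ToolResult(False, payload, f"HTTP {status}: {message}")
```

Returning a result object instead of raising on HTTP errors lets the assistant see and reason about a failure, such as an invalid key, rather than having the tool call abort.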

Key features include:

- File Upload Flexibility: Upload from disk or via a public URL, supporting large media files without manual chunking.

- Template Discovery: Retrieve all processing templates available in the user’s ZapCap account, enabling dynamic selection based on context or user preference.

- Task Customization: Configure a wide range of options—auto‑approval, language selection, B‑roll inclusion, subtitle styling (emoji, animation, keyword emphasis), and pacing—through a single tool call.

- Real‑time Monitoring: Progress tracking is not exposed as a separate tool, but the underlying ZapCap API provides task status callbacks that an AI client can consume for progress reporting.
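The task options and progress tracking listed above can be sketched as follows. `TaskOptions` gathers the customization settings into one validated payload. `wait_for_task` then follows the task to completion. The field names, the pacing values, and the status names `completed` and `failed` are all hypothetical. Note also that this sketch swaps in simple polling for the callbacks the API is described as offering, because polling needs no inbound endpoint.

```python
import time
from dataclasses import asdict, dataclass, field
from typing import Callable

# Hypothetical option values and status names; the real ZapCap API may differ.
PACING_VALUES = {"slow", "normal", "fast"}
TERMINAL_STATES = {"completed", "failed"}


@dataclass
class SubtitleStyle:
    emoji: bool = False
    animation: bool = True
    emphasize_keywords: bool = True


@dataclass
class TaskOptions:
    template_id: str
    auto_approve: bool = False
    language: str = "en"
    broll: bool = False
    subtitles: SubtitleStyle = field(default_factory=SubtitleStyle)
    pacing: str = "normal"

    def to_payload(self) -> dict:
        """Validate the options and flatten them into a JSON-ready dict."""
        if not self.template_id:
            raise ValueError("template_id is required")
        if self.pacing not in PACING_VALUES:
            raise ValueError(f"pacing must be one of {sorted(PACING_VALUES)}")
        return asdict(self)  # the nested SubtitleStyle becomes a nested dict


def wait_for_task(
    fetch_status: Callable[[str], str],
    task_id: str,
    interval: float = 5.0,
    timeout: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> tuple[str, list[str]]:
    """Poll until the task reaches a terminal state; return it plus the status history."""
    deadline = time.monotonic() + timeout
    history: list[str] = []
    while True:
        status = fetch_status(task_id)
        if not history or history[-1] != status:
            history.append(status)  # record each distinct transition once
        if status in TERMINAL_STATES:
            return status, history
        if time.monotonic() >= deadline:
            raise TimeoutError(f"task {task_id} still {status!r} after {timeout}s")
        sleep(interval)
```

Injecting `fetch_status` and `sleep` keeps the loop independent of any HTTP client and makes it easy to exercise with a fake status source.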

Typical use cases range from automated video summarization bots that fetch raw footage, apply a “quick‑edit” template, and return an engaging clip, to content‑creation assistants that generate bilingual subtitles with animated emojis for social media posts. In educational settings, an AI tutor could upload lecture recordings, request a transcript task with key terms highlighted, and then deliver the polished output to students.

Integration into existing AI workflows is straightforward: add a single MCP server entry to the client's configuration, set the environment variable that holds your ZapCap credentials, and invoke any of the provided tools. The server's declarative nature means that developers can write high‑level prompts like “Create a B‑roll enhanced summary of this video” and let the MCP handle all lower‑level API interactions. This reduces boilerplate code, improves maintainability, and opens the door for more sophisticated media automation powered by conversational AI.
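Claude Desktop reads server entries from an `mcpServers` object in its JSON config file. Each entry has a `command` and may also have `args` and `env`. The sketch below assembles such an entry in Python without overwriting servers that are already configured. The launcher (`npx`), the package name (`zapcap-mcp-server`), and the variable name (`ZAPCAP_API_KEY`) are placeholders, since the actual values are not given here. Other clients, such as Cursor, may use a different file location or shape.

```python
import json


def add_mcp_server(config: dict, name: str, command: str, args: list[str], env: dict[str, str]) -> dict:
    """Return a copy of an MCP client config with one server entry added.

    The mcpServers/command/args/env layout follows Claude Desktop's config file.
    """
    servers = dict(config.get("mcpServers", {}))
    if name in servers:
        raise ValueError(f"server {name!r} is already configured")
    servers[name] = {"command": command, "args": list(args), "env": dict(env)}
    return {**config, "mcpServers": servers}


existing = {"mcpServers": {"other": {"command": "other-server", "args": []}}}
updated = add_mcp_server(
    existing,
    name="zapcap",
    command="npx",                            # hypothetical launcher
    args=["-y", "zapcap-mcp-server"],         # hypothetical package name
    env={"ZAPCAP_API_KEY": "your-key-here"},  # hypothetical variable name
)
print(json.dumps(updated, indent=2))
```

Returning a new dict instead of mutating the loaded config makes it easy to diff the before and after versions before writing the file back.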

Related Servers

RedNote MCP

Access Xiaohongshu notes via command line

Awesome MCP List

Curated collection of Model Context Protocol servers for automation and AI

Rube MCP Server

AI‑driven integration for 500+ business apps

Google Tasks MCP Server

Integrate Google Tasks into your workflow

Google Calendar MCP Server

Integrate Claude with Google Calendar for event management

PubMed Analysis MCP Server

Rapid PubMed literature insights for researchers

Explore More Servers

Garc33 Js Sandbox MCP Server

Secure JavaScript execution in an isolated environment

MCP Knowledge Base Server

LLM‑powered Q&A with tool integration

Mcp Dichvucong

Real‑time Vietnamese public service data for AI assistants

MCP-Smallest.ai

Middleware for Smallest.ai Knowledge Base via MCP

Firebase Docs MCP Server

Serve Firebase docs via Model Context Protocol over stdio

ZIN MCP Client

Lightweight CLI & Web UI for MCP server interaction