About

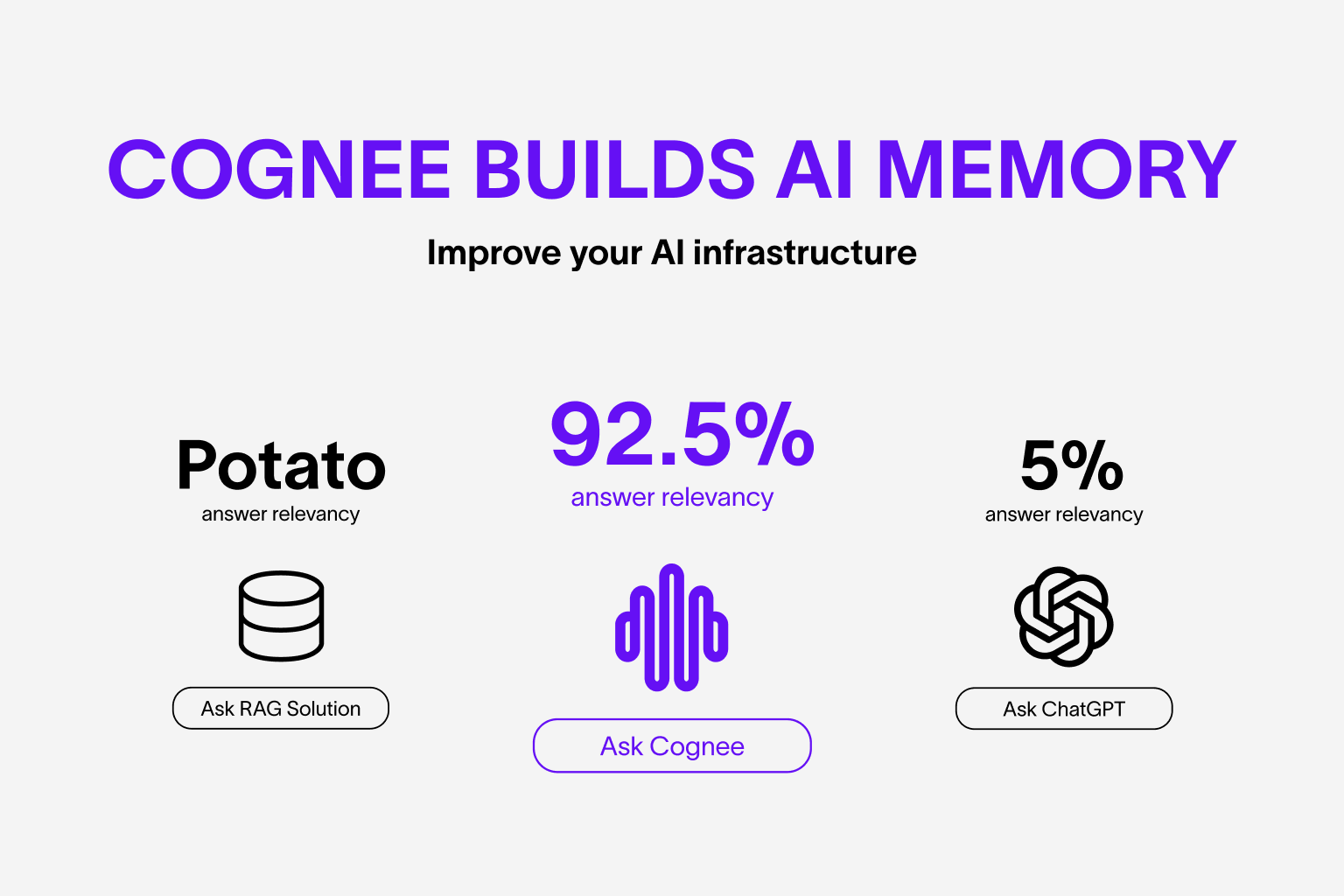

Cognee provides a modular, extensible memory system that replaces traditional Retrieval-Augmented Generation. It builds dynamic knowledge bases for AI agents using ECL (Extract, Cognify, Load) pipelines, enabling fast, scalable context retrieval and knowledge management.

Capabilities

Cognee‑MCP addresses a fundamental challenge in modern AI applications: persistent, context‑aware memory for autonomous agents. Traditional retrieval‑augmented generation (RAG) pipelines rely on static document stores and repeated, costly retrieval on every query, which makes it difficult for agents to retain knowledge across sessions or adapt quickly to new information. By exposing a Model Context Protocol (MCP) interface, Cognee turns its memory engine into a first‑class, pluggable service that any MCP-compatible AI assistant, whether built on Claude, Gemini, or a custom model, can call as if it were a native tool. This abstraction lets developers move from ad‑hoc data retrieval to structured, indexed knowledge graphs without changing the underlying LLM.
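
Under MCP, a client invokes a server tool by sending a JSON-RPC 2.0 `tools/call` request. The minimal Python sketch below builds such a message; the tool name `search` and its `query` argument are hypothetical placeholders, not confirmed names from Cognee-MCP's tool list.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message string."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool name and argument, for illustration only.
print(make_tool_call(1, "search", {"query": "What changed in the latest release?"}))
```

The server answers with a JSON-RPC response carrying the same `id`, whose `result` holds the tool's output.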

At its core, Cognee‑MCP implements a modular ECL (Extract, Cognify, Load) pipeline. The Extract stage ingests raw data from diverse sources (web pages, PDFs, APIs) and normalises it into a consistent format. Cognify applies semantic parsing and entity resolution, turning unstructured text into richly annotated knowledge graphs. Finally, Load persists the processed data in a scalable graph database and exposes it through MCP endpoints for querying, updating, and reasoning. Beyond replacing RAG, this workflow gives fine‑grained control over how data is stored, retrieved, and updated: details that are invisible to the LLM but crucial for compliance, auditability, and performance.
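
To make the three stages concrete, here is a deliberately tiny Python sketch. It is not Cognee's implementation: the regex in `cognify` is a hypothetical stand-in for semantic parsing and entity resolution, and an in-memory adjacency map stands in for the graph database.

```python
import re
from collections import defaultdict

# Toy relation pattern: "<Name> uses|extends|replaces <Name>" (an assumption for this sketch).
TRIPLE_PATTERN = re.compile(r"\b([A-Z][\w-]*) (uses|extends|replaces) ([A-Z][\w-]*)")


def extract(raw_docs: list) -> list:
    """Extract: normalise raw text by collapsing whitespace and trimming edges."""
    return [re.sub(r"\s+", " ", doc).strip() for doc in raw_docs]


def cognify(docs: list) -> list:
    """Cognify (toy stand-in): turn matching sentences into (subject, relation, object) triples."""
    triples = []
    for doc in docs:
        triples.extend(TRIPLE_PATTERN.findall(doc))
    return triples


def load(triples: list, graph=None) -> dict:
    """Load: persist triples in an in-memory adjacency map standing in for a graph database."""
    graph = graph if graph is not None else defaultdict(set)
    for subj, rel, obj in triples:
        graph[subj].add((rel, obj))
    return graph


raw = ["  Cognee   replaces RAG.\n", "Cognee uses MCP.  ", "AgentX uses Cognee."]
graph = load(cognify(extract(raw)))
for node, edges in sorted(graph.items()):
    print(node, sorted(edges))
```

Keeping each stage a separate function mirrors the modularity described above: any one stage can be replaced without touching the other two.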

Key capabilities of Cognee‑MCP include:

- Dynamic memory expansion: Agents can add new facts on the fly, with automatic deduplication and versioning.

- Context‑aware retrieval: Queries return not only matching documents but also their relational context, enabling richer reasoning.

- Grounded generation: Developers can steer the model's output by injecting retrieved knowledge directly into the prompt, reducing hallucination.

- Tool integration: The MCP surface exposes Cognee's memory operations as tools that any MCP-compatible client can invoke from an LLM prompt.

- Multi‑language support: The underlying pipeline natively processes content in dozens of languages, making it suitable for global deployments.
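
The first three capabilities can be illustrated with a small, self-contained Python sketch. `MemoryStore`, `add_fact`, `retrieve`, and `build_prompt` are hypothetical names, not Cognee's API. Deduplication here is exact matching after case-folding, "versioning" is a simple change counter, and relational context is a breadth-first expansion over stored facts.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryStore:
    """Hypothetical fact store: case-insensitive exact-match deduplication
    plus a version counter bumped on every effective change."""

    facts: set = field(default_factory=set)  # {(subject, relation, object)}
    version: int = 0

    def add_fact(self, subj: str, rel: str, obj: str) -> bool:
        """Store a fact; return False and leave `version` unchanged on a duplicate."""
        key = (subj.strip().lower(), rel.strip().lower(), obj.strip().lower())
        if key in self.facts:
            return False
        self.facts.add(key)
        self.version += 1
        return True

    def retrieve(self, entity: str, hops: int = 1) -> list:
        """Return facts touching `entity`, expanded `hops` steps outward so the
        caller also sees the relational context around direct matches."""
        frontier = {entity.strip().lower()}
        seen = set(frontier)
        results = set()
        for _ in range(hops + 1):
            next_frontier = set()
            for subj, rel, obj in self.facts:
                if subj in frontier or obj in frontier:
                    results.add((subj, rel, obj))
                    next_frontier |= {subj, obj} - seen
            seen |= next_frontier
            frontier = next_frontier
        return sorted(results)


def build_prompt(question: str, facts: list) -> str:
    """Inject retrieved facts ahead of the question to ground the model's answer."""
    context = "\n".join(f"- {s} {r} {o}" for s, r, o in facts)
    return f"Known facts:\n{context}\n\nQuestion: {question}"


store = MemoryStore()
store.add_fact("Cognee", "uses", "MCP")
store.add_fact("cognee", "uses", "mcp")  # duplicate after case-folding: ignored
store.add_fact("MCP", "connects", "Claude")
print(store.version)  # 2
print(build_prompt("What does Cognee use?", store.retrieve("Cognee")))
```

With `hops=1`, asking about "Cognee" also returns the fact linking MCP to Claude, which a plain keyword match on "Cognee" would have missed.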

Real‑world scenarios that benefit from Cognee‑MCP are plentiful. A customer support chatbot can maintain a live knowledge base of product updates, automatically incorporating new release notes as they appear. A research assistant can track citations and infer connections between papers, surfacing emergent trends without manual curation. In enterprise settings, compliance teams can audit an AI agent’s memory changes in real time, ensuring that sensitive data is handled correctly. Because Cognee‑MCP speaks MCP, all these use cases can be stitched together in a single workflow, with agents seamlessly moving between memory queries, external API calls, and LLM generation.

For developers building AI pipelines, Cognee‑MCP offers a standalone memory service that plugs into existing MCP‑compliant toolchains. By decoupling memory from the LLM, teams can scale storage independently, apply custom indexing strategies, and even swap out underlying databases without touching the assistant logic. This modularity, combined with its rich feature set and ease of integration, makes Cognee‑MCP a standout solution for building sophisticated, context‑rich AI agents that learn and evolve over time.
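
Decoupling memory from the assistant amounts to programming against an interface. In this sketch, `GraphBackend`, `InMemoryBackend`, `SqliteBackend`, and `MemoryService` are hypothetical names; the point is only that the assistant-facing `MemoryService` does not change when the storage class is swapped. SQLite is used purely because it ships with Python, not because Cognee uses it.

```python
import sqlite3
from typing import Protocol


class GraphBackend(Protocol):
    """Hypothetical storage interface that the assistant logic depends on."""

    def upsert_edge(self, subj: str, rel: str, obj: str) -> None: ...
    def neighbours(self, node: str) -> list: ...


class InMemoryBackend:
    """Keeps edges in a dict of sets; duplicates collapse automatically."""

    def __init__(self) -> None:
        self._edges: dict = {}

    def upsert_edge(self, subj: str, rel: str, obj: str) -> None:
        self._edges.setdefault(subj, set()).add((rel, obj))

    def neighbours(self, node: str) -> list:
        return sorted(self._edges.get(node, set()))


class SqliteBackend:
    """Same interface backed by SQLite; a UNIQUE constraint drops duplicates."""

    def __init__(self, path: str = ":memory:") -> None:
        self._db = sqlite3.connect(path)
        self._db.execute(
            "CREATE TABLE IF NOT EXISTS edges "
            "(subj TEXT, rel TEXT, obj TEXT, UNIQUE(subj, rel, obj))"
        )

    def upsert_edge(self, subj: str, rel: str, obj: str) -> None:
        self._db.execute("INSERT OR IGNORE INTO edges VALUES (?, ?, ?)", (subj, rel, obj))
        self._db.commit()

    def neighbours(self, node: str) -> list:
        rows = self._db.execute(
            "SELECT rel, obj FROM edges WHERE subj = ? ORDER BY rel, obj", (node,)
        )
        return [tuple(row) for row in rows]


class MemoryService:
    """Assistant-facing service; it only knows the GraphBackend protocol."""

    def __init__(self, backend: GraphBackend) -> None:
        self.backend = backend

    def remember(self, subj: str, rel: str, obj: str) -> None:
        self.backend.upsert_edge(subj, rel, obj)

    def recall(self, node: str) -> list:
        return self.backend.neighbours(node)


for backend in (InMemoryBackend(), SqliteBackend()):
    service = MemoryService(backend)
    service.remember("release-2.0", "adds", "offline-mode")
    service.remember("release-2.0", "adds", "offline-mode")  # duplicate: ignored by both
    print(type(backend).__name__, service.recall("release-2.0"))
```

Both backends print the same single edge, so the caller cannot tell which storage engine is in use.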

Related Servers

n8n

Self‑hosted, code‑first workflow automation platform

FastMCP

TypeScript framework for rapid MCP server development

Activepieces

Open-source AI automation platform for building and deploying extensible workflows

MaxKB

Enterprise‑grade AI agent platform with RAG and workflow orchestration.

Filestash

Web‑based file manager for any storage backend

MCP for Beginners

Learn Model Context Protocol with hands‑on examples

Explore More Servers

Langchain Llama Index OpenAI Docs MCP Server

Quickly retrieve docs snippets for Langchain, Llama Index, and OpenAI

Airtable MCP Server

Seamless Airtable API integration for Claude Desktop

MySQL MCP Server

AI-Enabled MySQL Operations via MCP

UV Package Manager Server

Fast, all-in-one Python package and environment manager

Local Scanner MCP Server

AI-powered local web & code analysis tools

Things MCP Server

AI‑powered task management for Things 3