About

This lightweight TypeScript library transforms Model Context Protocol (MCP) server tools into LangChain StructuredTools, enabling easy integration with LangChain agents. It supports schema adjustments for LLM compatibility and tool invocation logging.
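The core conversion can be pictured as wrapping one MCP tool definition in an object an agent can call. The sketch below assumes a simplified view of MCP's `tools/list` entries (name, description, JSON Schema `inputSchema`) and `tools/call` results (a `content` array of items such as `{ type: "text", text }`). `ToolLike`, `CallTool`, and `toToolLike` are hypothetical names standing in for LangChain's `StructuredTool`. This is not the library's actual code or LangChain's real class.

```typescript
// Simplified shape of a tool as listed by an MCP server.
interface McpToolDef {
  name: string;
  description?: string;
  inputSchema: Record<string, unknown>; // JSON Schema for the tool's arguments
}

// Simplified MCP tools/call result: a list of content items.
interface McpCallResult {
  content: Array<{ type: string; text?: string }>;
  isError?: boolean;
}

// Hypothetical stand-in for LangChain's StructuredTool (not the real class).
interface ToolLike {
  name: string;
  description: string;
  schema: Record<string, unknown>;
  invoke(args: Record<string, unknown>): Promise<string>;
}

// Hypothetical client function that forwards a call to the MCP server.
type CallTool = (name: string, args: Record<string, unknown>) => Promise<McpCallResult>;

// Wrap one MCP tool definition: calls are forwarded to the server and the
// text parts of the result are joined into a single string for the LLM.
function toToolLike(def: McpToolDef, callTool: CallTool): ToolLike {
  return {
    name: def.name,
    description: def.description ?? "",
    schema: def.inputSchema,
    async invoke(args) {
      const result = await callTool(def.name, args);
      const text = result.content
        .filter((c) => c.type === "text" && typeof c.text === "string")
        .map((c) => c.text)
        .join("\n");
      // Design choice of this sketch: surface server-reported errors as exceptions.
      if (result.isError) throw new Error(text || `Tool ${def.name} reported an error`);
      return text;
    },
  };
}

// Usage with an in-memory fake server that echoes its "msg" argument.
const echo = toToolLike(
  { name: "echo", description: "Echo a message", inputSchema: { type: "object", properties: { msg: { type: "string" } } } },
  async (_name, args) => ({ content: [{ type: "text", text: String(args["msg"]) }] }),
);
echo.invoke({ msg: "hello" }).then(console.log); // hello
```

Throwing on `isError` is one reasonable choice. Returning the error text to the model instead would also work, and the library's own behavior may differ.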

Capabilities

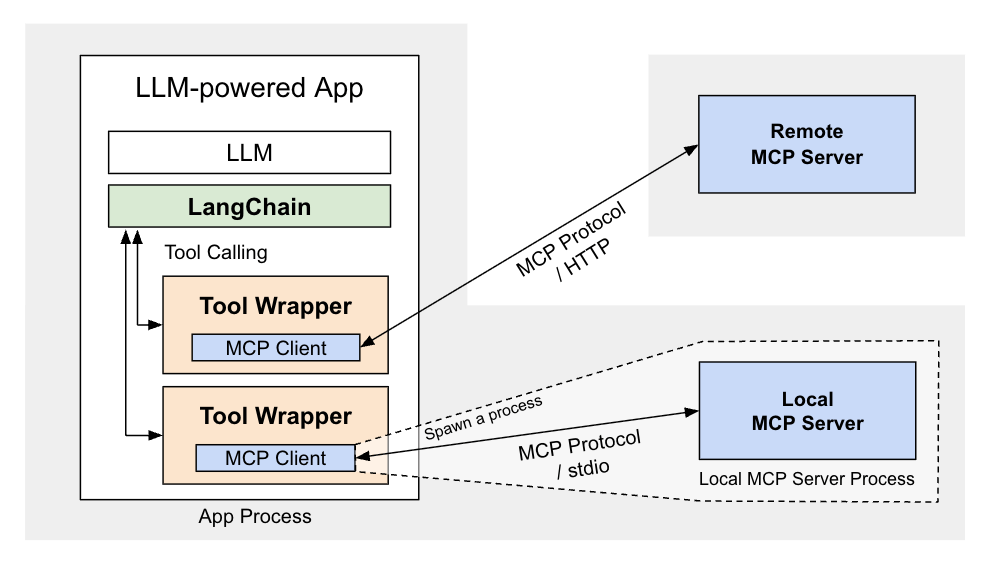

The LangChain MCP Tools TypeScript library is a lightweight bridge between the Model Context Protocol (MCP) and LangChain: it lets AI agents built with LangChain call the tools exposed by any MCP server. In practice, it turns a collection of standalone MCP servers, such as filesystem access, HTTP fetch, GitHub, or Notion integration, into a single, unified set of LangChain tool objects. This eliminates the need to write a custom adapter for each external service and lets LangChain agents invoke those tools as if they were native LangChain tools.
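To make the "unified set" idea concrete, here is a minimal sketch built on a hypothetical configuration shape: a record that maps a developer-chosen server name to the command that launches it. `ServerSpec`, `ToolDef`, and `unifyTools` are illustrative names, not the library's API. The two launch commands point at the reference filesystem and fetch MCP servers. Rejecting duplicate tool names is a design choice of this sketch.

```typescript
// Hypothetical launch spec for one MCP server (illustrative, not the library's type).
interface ServerSpec {
  command: string; // executable that starts the server
  args: string[];  // arguments passed to that executable
}

// A tool in the unified set, remembering which server it came from.
interface ToolDef {
  server: string;
  name: string;
  description: string;
}

// One entry per MCP server; the keys are arbitrary labels.
const servers: Record<string, ServerSpec> = {
  filesystem: { command: "npx", args: ["-y", "@modelcontextprotocol/server-filesystem", "."] },
  fetch: { command: "uvx", args: ["mcp-server-fetch"] },
};

// Flatten per-server tool lists into one array, refusing duplicate tool
// names so the agent never sees two tools it cannot tell apart.
function unifyTools(
  perServer: Record<string, { name: string; description: string }[]>,
): ToolDef[] {
  const seen = new Set<string>();
  const all: ToolDef[] = [];
  for (const [server, tools] of Object.entries(perServer)) {
    for (const t of tools) {
      if (seen.has(t.name)) {
        throw new Error(`Duplicate tool name "${t.name}" (from server "${server}")`);
      }
      seen.add(t.name);
      all.push({ server, name: t.name, description: t.description });
    }
  }
  return all;
}

// Usage with sample tool lists (not fetched from real servers).
console.log(Object.keys(servers).join(", ")); // filesystem, fetch
const unified = unifyTools({
  filesystem: [{ name: "read_file", description: "Read a file" }],
  fetch: [{ name: "fetch", description: "Fetch a URL" }],
});
console.log(unified.map((t) => `${t.server}/${t.name}`).join(", ")); // filesystem/read_file, fetch/fetch
```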

What problem does it solve? MCP servers describe each tool with a raw JSON schema, which can be cumbersome to consume directly from a LangChain workflow. Developers often have to translate those schemas by hand, handle authentication, and stitch together tool outputs. The MCP‑to‑LangChain utility automates this pipeline: it launches each specified MCP server in parallel, pulls the available tool definitions, adapts their JSON schemas for compatibility with popular LLM providers (Google Gemini, OpenAI, Anthropic), and wraps each tool as a LangChain StructuredTool. The result is a ready‑to‑use toolbox that can be dropped into any LangChain agent, chain, or workflow.
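The parallel launch step can be sketched with `Promise.allSettled`, using in-memory fakes instead of real server processes. `FakeServer`, `Launcher`, `startAll`, and `fakeLauncher` are hypothetical names. A real client would spawn each server and speak JSON-RPC to it, which this sketch does not do. The sketch waits for every launch, so that if one server fails, the ones that did start can still be closed. The returned `cleanup` mirrors the single shutdown function described in the feature list.

```typescript
// Hypothetical in-memory stand-in for a connected MCP server.
interface FakeServer {
  listTools(): Promise<string[]>;
  close(): Promise<void>;
}

type Launcher = () => Promise<FakeServer>;

// Start every server concurrently, gather all tool names (prefixed with the
// server name), and return one cleanup function that closes every server.
async function startAll(
  launchers: Record<string, Launcher>,
): Promise<{ tools: string[]; cleanup: () => Promise<void> }> {
  const entries = Object.entries(launchers);
  // allSettled waits for every launch, so a failure elsewhere cannot leave
  // an already-started server untracked.
  const results = await Promise.allSettled(entries.map(([, launch]) => launch()));
  const connected: FakeServer[] = [];
  for (const r of results) {
    if (r.status === "fulfilled") connected.push(r.value);
  }
  const cleanup = async (): Promise<void> => {
    await Promise.all(connected.map((s) => s.close()));
  };
  if (connected.length !== entries.length) {
    await cleanup(); // close the servers that did start, then report failure
    throw new Error("At least one MCP server failed to start");
  }
  // All servers are up, so connected[i] corresponds to entries[i].
  const toolLists = await Promise.all(connected.map((s) => s.listTools())).catch(async (err) => {
    await cleanup();
    throw err;
  });
  const tools: string[] = [];
  toolLists.forEach((list, i) => {
    for (const t of list) tools.push(`${entries[i][0]}/${t}`);
  });
  return { tools, cleanup };
}

// Demo launcher whose server records its own shutdown.
function fakeLauncher(name: string, toolNames: string[], closed: string[]): Launcher {
  return async () => ({
    listTools: async () => toolNames,
    close: async () => {
      closed.push(name);
    },
  });
}

const closed: string[] = [];
startAll({
  filesystem: fakeLauncher("filesystem", ["read_file"], closed),
  fetch: fakeLauncher("fetch", ["fetch"], closed),
}).then(async ({ tools, cleanup }) => {
  console.log(tools.join(", ")); // filesystem/read_file, fetch/fetch
  await cleanup();
  console.log(closed.length); // 2
});
```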

Key features include:

- Parallel server orchestration – All MCP servers are started concurrently, reducing initialization time.

- Provider‑aware schema transformations – When an LLM provider is specified, the library rewrites tool schemas to avoid common compatibility warnings or errors.

- Invocation logging – Every tool call is recorded, making debugging and audit trails straightforward.

- Cleanup callback – A single async function shuts down all MCP server processes when the agent finishes, preventing orphaned resources.
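The schema transformation and invocation logging features can be illustrated with two small helpers. In `adaptSchema`, the list of keywords dropped for `google_gemini` is an illustrative assumption, not the library's actual rule set. The point is the technique: a recursive copy that removes keywords a provider rejects while leaving property names and literal values (`enum`, `const`, `examples`) intact. `withLogging` is a hypothetical wrapper that records each call and its outcome.

```typescript
type JsonSchema = { [key: string]: unknown };

// Illustrative assumption only: keywords each provider is taken to reject.
const UNSUPPORTED_KEYWORDS: Record<string, string[]> = {
  google_gemini: ["$schema", "additionalProperties", "default"],
};
// Keywords whose value maps user-chosen names to sub-schemas (names are kept).
const NAME_MAPS = new Set(["properties", "patternProperties", "$defs", "definitions"]);
// Keywords whose value is literal data, copied verbatim.
const LITERAL_KEYWORDS = new Set(["const", "enum", "examples"]);

function isObject(v: unknown): v is JsonSchema {
  return typeof v === "object" && v !== null && !Array.isArray(v);
}

// Recursively copy a schema, dropping the keywords the provider is assumed to reject.
function adaptSchema(schema: unknown, provider: string): unknown {
  if (Array.isArray(schema)) return schema.map((s) => adaptSchema(s, provider));
  if (!isObject(schema)) return schema;
  const drop = new Set(UNSUPPORTED_KEYWORDS[provider] ?? []);
  const out: JsonSchema = {};
  for (const [key, value] of Object.entries(schema)) {
    if (drop.has(key)) continue;
    if (LITERAL_KEYWORDS.has(key)) {
      out[key] = value;
    } else if (NAME_MAPS.has(key) && isObject(value)) {
      const mapped: JsonSchema = {};
      for (const [name, sub] of Object.entries(value)) mapped[name] = adaptSchema(sub, provider);
      out[key] = mapped;
    } else {
      out[key] = adaptSchema(value, provider);
    }
  }
  return out;
}

type ToolFn = (input: Record<string, unknown>) => Promise<string>;

// Hypothetical wrapper: record every call, its result size, or its failure.
function withLogging(name: string, fn: ToolFn, log: (line: string) => void = console.log): ToolFn {
  return async (input) => {
    log(`[tool] ${name} called with ${JSON.stringify(input)}`);
    try {
      const result = await fn(input);
      log(`[tool] ${name} returned ${result.length} chars`);
      return result;
    } catch (err) {
      log(`[tool] ${name} failed: ${String(err)}`);
      throw err;
    }
  };
}

// Usage
const schema = {
  $schema: "http://json-schema.org/draft-07/schema#",
  type: "object",
  properties: { path: { type: "string", default: "." } },
  required: ["path"],
  additionalProperties: false,
};
console.log(JSON.stringify(adaptSchema(schema, "google_gemini")));
// {"type":"object","properties":{"path":{"type":"string"}},"required":["path"]}

const readFile = withLogging("read_file", async (input) => `contents of ${String(input["path"])}`);
readFile({ path: "notes.txt" }).then((r) => console.log(r));
```

For a provider with no entry in `UNSUPPORTED_KEYWORDS`, the schema is copied unchanged, which matches the idea that rewriting only happens when a provider is specified.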

Typical use cases range from a personal productivity assistant that reads and edits Notion pages to an AI‑powered code review bot that fetches files from a GitHub repository and performs filesystem operations. In research settings, the library enables rapid prototyping of multi‑tool agents without wiring up each integration manually. Because it works purely through the MCP interface, any future tool that follows the protocol can be added by listing its server in the configuration, without changing the agent code.

In summary, the LangChain MCP Tools TypeScript library offers a plug‑and‑play way for developers to give their AI assistants external capabilities while keeping the codebase clean, maintainable, and LLM‑agnostic.
