About

An MCP server that exposes a single run_command tool, allowing language models to execute shell commands with optional stdin and capture STDOUT/STDERR. Ideal for integrating command execution into LLM workflows while enforcing user‑controlled permissions.

Capabilities

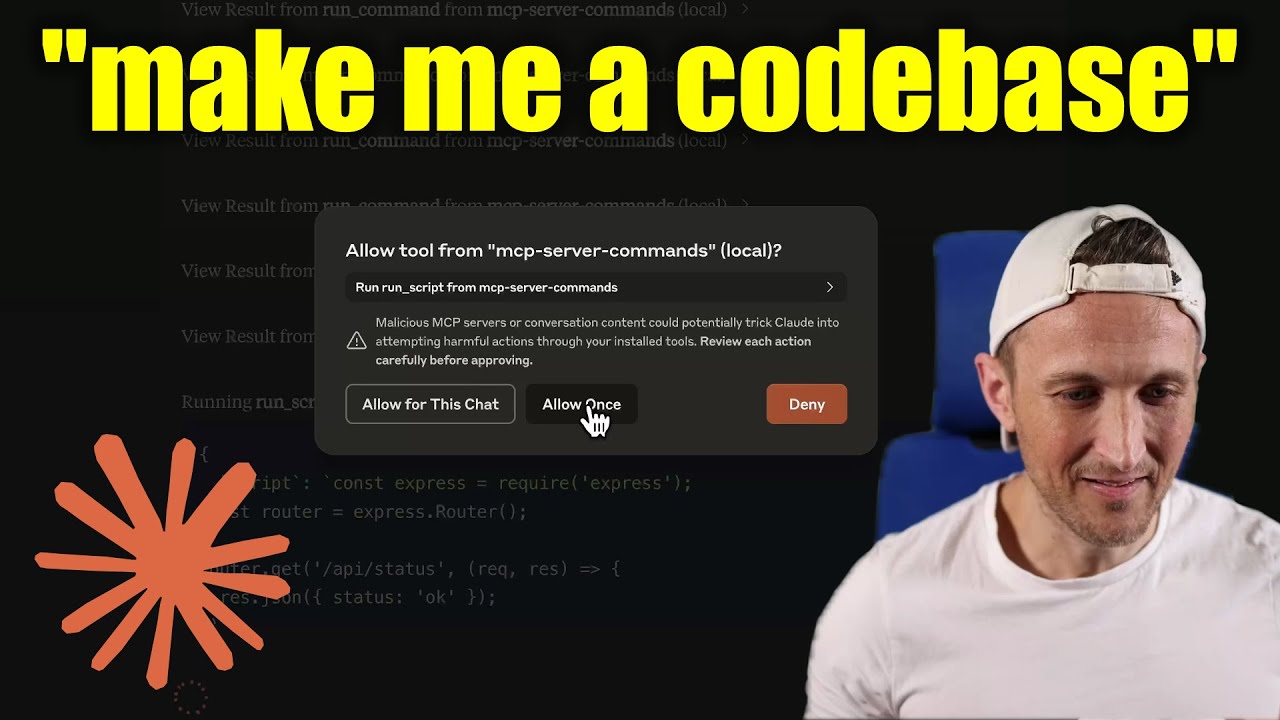

The mcp‑server-commands MCP server turns a local shell into a first‑class tool for AI assistants. By exposing the single run_command capability, it allows Claude or any LLM client that supports MCP to execute arbitrary shell commands, from one‑line utilities to more complex scripts, and receive the resulting stdout and stderr directly in the chat. This bridges the gap between purely conversational models and real‑world system interaction, enabling agents to query filesystems, run diagnostics, or automate repetitive tasks without leaving the chat interface.
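As a rough illustration of what such a tool invocation looks like on the wire, the sketch below builds an MCP `tools/call` request (JSON-RPC 2.0) for a tool named run_command. The argument names `command` and `stdin` and the helper `build_tool_call` are assumptions made for this example; check the server's advertised tool schema for the actual field names.

```python
import json


def build_tool_call(request_id, command, stdin=None):
    """Build a JSON-RPC 2.0 `tools/call` request for a tool named run_command.

    The argument names "command" and "stdin" are assumptions for illustration,
    not confirmed field names of this server's schema.
    """
    arguments = {"command": command}
    if stdin is not None:
        # Only include stdin when the caller actually supplies input.
        arguments["stdin"] = stdin
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": "run_command", "arguments": arguments},
    }


if __name__ == "__main__":
    # Print the request an MCP client might send for a simple command.
    print(json.dumps(build_tool_call(1, "uname -a"), indent=2))
```

An MCP client normally builds this message for you; the sketch only makes the request shape visible.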

Developers benefit from a lightweight, zero‑configuration server that plugs into existing MCP‑compatible workflows. The server runs under the permissions of the user who starts it, and careful use of “Approve Once” in Claude Desktop ensures that each command can be inspected before execution. This makes it suitable for both local experimentation and production use, provided the server is not started with elevated privileges. The ability to pass stdin lets the model supply input to scripts or interactive programs, and even create files via shell redirection, giving a near‑native scripting experience within the chat.
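The behavior described above (run a command, optionally feed it stdin, capture both output streams) can be sketched with Python's standard `subprocess` module. This is a minimal illustration for a POSIX shell, not the server's actual implementation; the result keys and the `timeout` default are assumptions chosen for the example.

```python
import os
import subprocess
import tempfile


def run_command(command, stdin=None, timeout=30):
    """Run `command` through the shell and return exit code, stdout and stderr as text."""
    completed = subprocess.run(
        command,
        shell=True,           # interpret the string with the system shell
        input=stdin,          # optional text piped to the command's stdin
        capture_output=True,  # collect stdout and stderr instead of inheriting them
        text=True,            # decode the streams to str
        timeout=timeout,      # assumed safety limit, in seconds
    )
    return {
        "exit_code": completed.returncode,
        "stdout": completed.stdout,
        "stderr": completed.stderr,
    }


if __name__ == "__main__":
    # Supplying stdin to a command that reads it.
    print(run_command("cat", stdin="hello from stdin\n"))

    # Creating a file via shell redirection, with the content passed as stdin.
    with tempfile.TemporaryDirectory() as tmp:
        target = os.path.join(tmp, "notes.txt")
        run_command(f"cat > '{target}'", stdin="first line\n")
        with open(target) as handle:
            print(handle.read())
```

Because `shell=True` hands the string to the shell unmodified, the command runs with all the privileges of the calling user, which is why inspecting each command before approval matters.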

Key features include:

- Single unified tool: run_command handles any shell command, returning both output streams as text.

- Secure execution: Commands are run with the invoking user’s permissions; the server warns against running it with elevated privileges.

- Prompt integration: The same tool can be used to generate prompt messages that include command output, streamlining workflows that need to embed system data in conversations.

- Extensible deployment: Works out of the box with Claude Desktop, Groq Desktop (beta), or any MCP‑compatible client. It can also be exposed over HTTP for integration with web UIs like Open‑WebUI.

- Verbose logging: An optional flag enables detailed diagnostics, useful during development or troubleshooting.
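To make the privilege warning and the verbose option from the list above concrete, here is a small sketch of how a command-running service might check for elevated privileges at startup and adjust its log level. The function names, logger name, and `verbose` parameter are hypothetical and do not reflect this server's code or its actual flag.

```python
import logging
import os

logger = logging.getLogger("run_command_sketch")  # hypothetical logger name


def is_elevated(euid=None):
    """Return True when the effective user ID is root (POSIX only)."""
    if euid is None:
        # os.geteuid is unavailable on Windows; treat that case as not elevated.
        euid = os.geteuid() if hasattr(os, "geteuid") else -1
    return euid == 0


def configure(verbose=False, euid=None):
    """Set the log level and warn if the process would run commands as root."""
    logging.basicConfig(level=logging.DEBUG if verbose else logging.WARNING)
    elevated = is_elevated(euid)
    if elevated:
        logger.warning("Running with elevated privileges: every command will run as root.")
    logger.debug("Startup check complete (elevated=%s)", elevated)
    return elevated


if __name__ == "__main__":
    configure(verbose=True)
```

The `euid` parameter exists only so the check can be exercised without actually running as root.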

Real‑world scenarios range from automated system maintenance (e.g., checking disk usage, monitoring services) to code generation and debugging (running tests or linting tools directly from the chat). In a CI/CD pipeline, an AI assistant could fetch build logs or trigger deployments by simply issuing a command. For developers experimenting with local LLMs, the server demonstrates how to coax models into tool use through system prompts or templates.

Because the server exposes only a single, well‑defined operation, it is straightforward to audit and sandbox. This simplicity, combined with robust integration paths, makes mcp‑server-commands a powerful addition to any AI‑driven development workflow that requires direct interaction with the underlying operating system.
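One way to picture why a single entry point is easy to audit: every command can be routed through one wrapper that asks for approval and records the decision. The sketch below assumes a POSIX shell and is not part of the server; `guarded_run`, `approve`, and `audit_log` are hypothetical names introduced for illustration.

```python
import subprocess


def guarded_run(command, approve, audit_log):
    """Run `command` only if `approve(command)` returns True; record every decision."""
    approved = bool(approve(command))
    # The audit entry is written whether or not the command is allowed to run.
    audit_log.append({"command": command, "approved": approved})
    if not approved:
        return None
    done = subprocess.run(command, shell=True, capture_output=True, text=True)
    return done.stdout


if __name__ == "__main__":
    log = []
    # A trivial policy for demonstration: approve only commands starting with "echo".
    policy = lambda cmd: cmd.startswith("echo")
    print(guarded_run("echo allowed", policy, log))
    print(guarded_run("rm -rf /tmp/example", policy, log))
    print(log)
```

In an interactive client the `approve` callback would be the user confirming the command, comparable in spirit to an “Approve Once” prompt.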

Related Servers

n8n

Self‑hosted, code‑first workflow automation platform

FastMCP

TypeScript framework for rapid MCP server development

Activepieces

Open-source AI automation platform for building and deploying extensible workflows

MaxKB

Enterprise‑grade AI agent platform with RAG and workflow orchestration.

Filestash

Web‑based file manager for any storage backend

MCP for Beginners

Learn Model Context Protocol with hands‑on examples

Explore More Servers

Maestro MCP Server

Explore Bitcoin via Maestro API with an LLM‑friendly interface

Dotnet PowerPlatform MCP Server

PowerPlatform integration made simple with .NET

X/Twitter MCP Server

Unofficial X/Twitter API via Playwright automation

Caddy MCP Server

Control Caddy via Model Context Protocol

Gentoro MCP Server

Enable Claude to interact with Gentoro bridges and tools

Netskope NPA MCP Server

AI‑powered automation for Netskope Private Access infrastructure